Customer expectations around support have changed significantly. Users don’t want to wait in long queues, submit support tickets, or browse through endless documentation. Instead, they expect instant, accurate, and conversational assistance powered by voice AI for customer support. This shift has made voice AI agents for customer service vital tools for businesses, and when paired with AI-driven customer service solutions, they help deliver faster and more personalized support.

For SaaS companies, especially those that manage subscription models and complex workflows, generic chatbots are no longer sufficient. Customers demand interactions that feel natural, personalized, and context-aware. While advanced models like GPT-4o can generate human-like conversations, their knowledge is fixed at training time and cannot reflect real-time product details such as billing rules, security protocols, or support tiers without external data sources. That’s why AI-based customer support solutions are becoming indispensable.

According to a report, 3 out of 4 consumers who have already used generative AI believe it will improve their customer service experiences in the coming years. By building voice AI agents that integrate your existing documentation like CSVs, PDFs, or DOCX files, you can create a powerful AI agent for customer service. Using Retrieval-Augmented Generation (RAG), these solutions ensure accurate, trustworthy, and up-to-date answers. In this blog, we’ll explore how AI agents for customer support can make your SaaS helpdesk smarter and more responsive.

How Do SaaS Helpdesks Benefit from Voice AI Agents?

Delivering exceptional customer support today requires more than scripted responses or generic chatbots. Modern users now expect conversations that are intuitive, fast, and accurate. 42% of CX leaders think generative AI will have an impact on voice-based engagements within the next two years. This is especially true for SaaS companies managing subscription plans, integrations, and complex workflows. To meet these expectations, AI agents for customer service are becoming essential. They allow businesses to offer seamless, personalized support experiences that feel natural while resolving queries instantly. For SaaS companies, automating helpdesks with voice AI agents leads to smoother workflows and faster resolutions. By combining AI-based customer support technologies with real-time communication tools, you can create an AI agent in customer service that not only understands customer needs but responds with context-specific, up-to-date information.

LiveKit Agents: Powering Real-Time Conversations

At the heart of this solution is LiveKit, an open-source WebRTC platform designed to handle real-time audio interactions. Its Agents framework abstracts the complexities of real-time communication and provides developers with a structured way to build advanced AI agents for SaaS customer service. Key capabilities include:

- Voice Activity Detection (VAD) – accurately detects when customers start and stop speaking, ensuring conversations feel fluid and uninterrupted.

- Speech-to-Text (STT) – powered by models like Whisper, this feature converts spoken words into text in real time, allowing the AI to understand queries instantly.

- LLM processing – interprets the user’s request, reasons about it, and generates a response, ensuring the interaction is intelligent and dynamic.

- Text-to-Speech (TTS) – delivers responses in a natural, human-like voice, making the conversation feel more personal and engaging.

Since LiveKit is an open source voice AI agents framework, it gives developers full flexibility to customize and scale real-time communication pipelines for SaaS helpdesks. Together, these features make LiveKit one of the best platforms for building voice AI agents that can respond to customer queries with both speed and empathy.

Retrieval-Augmented Generation (RAG): Making AI Smarter

Traditional conversational AI is limited by its training data and cannot reflect new product details, changes in policies, or specific customer information unless it’s explicitly coded. Retrieval-Augmented Generation (RAG) addresses this gap by allowing your AI agents for customer support to reference your internal documentation during interactions. Instead of relying solely on memorized knowledge, the AI fetches relevant information from sources like CSV files, PDFs, and DOCX documents while the conversation is happening.

The process works as follows:

- Indexing (offline) – your support documents are segmented into smaller chunks and stored in an efficient vector database for quick retrieval.

- Retrieval (real-time) – when a customer asks a question, the AI searches the database, retrieves the most relevant information, and synthesizes a response that is context-aware and accurate.

This approach enables your AI agent in customer service to deliver trustworthy answers, ensuring that users always get the latest, most relevant information.
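The two phases above can be sketched with a toy retriever that needs no external services. This is only an illustration under stated assumptions: word counts and cosine similarity stand in for a real embedding model and vector database, and the names `embed`, `build_index`, `retrieve`, and the sample chunks are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(chunks):
    """Indexing (offline): store each chunk next to its vector."""
    return [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(index, question, top_k=1):
    """Retrieval (real-time): rank stored chunks by similarity to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


chunks = [
    "Invoices can be downloaded from the Billing page.",
    "SSO is configured under Security settings using SAML.",
    "API keys can be rotated from the Developer dashboard.",
]
index = build_index(chunks)
top = retrieve(index, "Where do I download invoices?")
```

In a real pipeline the retrieved chunk would be passed to the LLM as context rather than returned verbatim.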

LlamaIndex: The Foundation for RAG Workflows

LlamaIndex is the backbone that makes RAG-based solutions scalable and easy to implement. It simplifies the process of connecting data sources, embedding information, and performing efficient searches. With LlamaIndex, developers can build AI agents for customer services that are reliable, fast, and able to access a wide range of documents seamlessly. Some of its core benefits include:

- Support for diverse data formats such as CSVs, PDFs, DOCX files, and more.

- Automated embedding and indexing workflows that reduce development effort.

- APIs designed to integrate smoothly with communication platforms like LiveKit.

- Tools that enrich conversations by providing metadata and structured information.

By leveraging LlamaIndex, you can create AI-based customer support solutions that are intelligent, scalable, and adaptable to a company’s unique knowledge base.

Architecture Overview: How It All Comes Together

Here’s how the system works to deliver an elevated customer experience using AI agents for SaaS customer service:

- A user joins a LiveKit room and speaks into the microphone.

- VAD and STT detect and transcribe the user’s speech into text.

- The LLM processes the query and, if needed, calls the RAG tool for additional information.

- LlamaIndex searches its database for relevant chunks of text based on the query.

- The LLM synthesizes the retrieved information and generates a context-aware response.

- TTS converts the response into voice, providing the customer with instant, human-like assistance.

This modular flow highlights the importance of building voice AI agents with a stack that combines real-time voice interaction and knowledge-grounded responses. It also keeps your AI agent in customer service ready to deliver accurate, timely, and conversational support.
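To make the order of the steps concrete, here is a minimal sketch of a single conversational turn using plain Python stubs. Nothing here calls LiveKit or LlamaIndex: every function and the `KNOWLEDGE` dictionary are hypothetical placeholders that only mirror the STT, LLM plus RAG, and TTS stages described above.

```python
from typing import Optional

# Hypothetical one-entry knowledge base standing in for the RAG index.
KNOWLEDGE = {"invoice": "Invoices are available on the Billing page."}


def speech_to_text(audio: bytes) -> str:
    """Stub STT: pretend the audio bytes are already UTF-8 text."""
    return audio.decode("utf-8")


def rag_lookup(query: str) -> Optional[str]:
    """Stub retrieval: return the first entry whose keyword appears in the query."""
    for keyword, answer in KNOWLEDGE.items():
        if keyword in query.lower():
            return answer
    return None


def llm_respond(query: str) -> str:
    """Stub LLM: ground the reply in retrieved text when any is found."""
    context = rag_lookup(query)
    if context is None:
        return "I can only help with helpdesk topics."
    return f"Here is what I found: {context}"


def text_to_speech(text: str) -> bytes:
    """Stub TTS: encode the reply instead of synthesizing audio."""
    return text.encode("utf-8")


def handle_turn(audio: bytes) -> bytes:
    """One conversational turn: STT -> LLM (+ RAG) -> TTS."""
    return text_to_speech(llm_respond(speech_to_text(audio)))


reply = handle_turn(b"Where is my invoice?")
```

Because each stage is a separate function, any one of them can be swapped for a real provider without touching the others, which is the main point of the modular design.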

Environment Setup: Getting Started with AI Agents for Customer Support

To build this solution, you’ll need:

- Python 3.11+ – for compatibility with modern libraries.

- A LiveKit Cloud project – to manage real-time communication.

- API keys for OpenAI, Cartesia, and LiveKit – OpenAI for speech-to-text and language understanding, Cartesia for text-to-speech, and LiveKit for real-time transport.

These components form the foundation for creating one of the top voice AI agents solutions available. With this setup, you can deploy voice AI agents that not only respond instantly but also guide customers through complex workflows, troubleshoot issues, and deliver reliable, personalized support.

Installation

In cmd

    python -m venv venv
    venv\Scripts\activate
    pip install -r requirements.txt

Environment Variables

Create a .env file:

    OPENAI_API_KEY=
    CARTESIA_API_KEY=
    LIVEKIT_URL=
    LIVEKIT_API_KEY=
    LIVEKIT_API_SECRET=

Preparing the Knowledge Base

- Create a folder files/ in your project root.

- Drop in multiple file formats:

- saas_helpdesk_faq.csv (FAQs)

- saas_billing_guide.pdf (billing policies)

- saas_sso_setup.docx (SSO setup guide)

Code Walkthrough: SaaS Helpdesk Voice Assistant (LiveKit + LlamaIndex)

1) Imports & What They’re For

    # app.py: SaaS Helpdesk Voice Agent (LiveKit + LlamaIndex, CSV+PDF+DOCX)
    from __future__ import annotations

    # --- Python Standard Libraries ---
    from pathlib import Path
    import os
    import sys
    import logging
    from typing import Annotated, List
    from datetime import datetime

    # --- Third-Party Libraries ---
    import pandas as pd
    from dotenv import load_dotenv

    # --- LlamaIndex (RAG) ---
    from llama_index.core import (
        Settings,
        StorageContext,
        VectorStoreIndex,
        load_index_from_storage,
    )
    from llama_index.core.schema import TextNode
    from llama_index.core.node_parser import SentenceSplitter
    from llama_index.llms.openai import OpenAI as LlamaOpenAI
    from llama_index.embeddings.openai import OpenAIEmbedding
    from llama_index.core import SimpleDirectoryReader

    # --- LiveKit Agents ---
    from livekit.agents import (
        Agent,
        AgentSession,
        AutoSubscribe,
        JobContext,
        WorkerOptions,
        cli,
        llm,
    )
    from livekit.plugins import openai, silero, cartesia

- Standard libs: paths, OS, logging, typing, time.

- dotenv: loads API keys from .env so secrets aren’t hard-coded.

- pandas: reads CSV knowledge bases.

- LlamaIndex: builds & queries the RAG index (vector store, nodes, chunking).

- LiveKit Agents + plugins: real-time audio (VAD/STT/TTS) and LLM runtime.

2) Config & Logging (fast failures, readable logs)

    # =========================== Config & Logging ===========================
    load_dotenv()

    logging.basicConfig(
        level=logging.INFO,
        format="%(asctime)s | %(levelname)s | %(message)s",
    )

    REQUIRED_ENV = [
        "OPENAI_API_KEY",
        "CARTESIA_API_KEY",
        "LIVEKIT_URL",
        "LIVEKIT_API_KEY",
        "LIVEKIT_API_SECRET",
    ]


    def _require_env():
        missing = [k for k in REQUIRED_ENV if not os.getenv(k)]
        if missing:
            logging.error(f"Missing required environment variables: {', '.join(missing)}")
            sys.exit(1)


    _require_env()

- Loads env vars, sets a consistent log format.

- _require_env() stops the app early if critical keys are missing, which is better than failing deep in the call stack.

3) RAG Settings & Model Choices

    # =========================== RAG Settings ===========================
    # Storage & data locations
    PERSIST_DIR = os.getenv("PERSIST_DIR", "./saas-helpdesk-store")
    DOCS_DIR = os.getenv("DOCS_DIR", "files")
    KB_FILE = os.getenv("KB_FILE", "")  # optionally pin a specific CSV file

    # Column that holds the answer text for CSVs
    TEXT_COLUMN_NAME = os.getenv("ANSWER_COLUMN", "faq_answer")

    # LLM / Embedding models (LlamaIndex layer)
    Settings.llm = LlamaOpenAI(model=os.getenv("OPENAI_LLM_MODEL", "gpt-4o"))
    Settings.embed_model = OpenAIEmbedding(
        model=os.getenv("OPENAI_EMBED_MODEL", "text-embedding-3-small")
    )

    # Runtime LLM (LiveKit plugin) & TTS can also be overridden via env
    OPENAI_RUNTIME_MODEL = os.getenv("OPENAI_RUNTIME_MODEL", "gpt-4o")
    CARTESIA_TTS_MODEL = os.getenv("CARTESIA_TTS_MODEL", "sonic-2")
    CARTESIA_TTS_VOICE = os.getenv("CARTESIA_TTS_VOICE", "bf0a246a-8642-498a-9950-80c35e9276b5")

- PERSIST_DIR: where the embedded index lives (so you don’t re-embed on every run).

- DOCS_DIR: folder with your knowledge files (CSV, PDF, DOCX, TXT/MD).

- ANSWER_COLUMN: which CSV column contains the answer text.

- Settings.llm/embed_model: models used inside LlamaIndex (retrieval + synthesis).

- OPENAI_RUNTIME_MODEL/CARTESIA: models used by the LiveKit agent at runtime.

4) Ingestion & Chunking Parameters

    # Ingestion / chunking params
    CHUNK_SIZE = int(os.getenv("CHUNK_SIZE", "1200"))
    CHUNK_OVERLAP = int(os.getenv("CHUNK_OVERLAP", "200"))

    SUPPORTED_TEXT_EXTS = {".txt", ".md"}
    SUPPORTED_DOC_EXTS = {".pdf", ".docx"}
    SUPPORTED_CSV_EXTS = {".csv"}

    LIKELY_ANSWER_COLUMNS = [
        "faq_answer", "answer", "response", "text", "content", "body"
    ]

- Chunking helps retrieval by splitting long docs into overlapping pieces.

- Supported formats are clearly defined and easy to extend.

- LIKELY_ANSWER_COLUMNS lets the loader auto-detect a sensible CSV column if your preferred one is missing.
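To see what chunk size and overlap mean in practice, here is a deliberately simplified sketch. It slices by characters, whereas SentenceSplitter counts tokens and prefers sentence boundaries, so treat `chunk_text` as a hypothetical illustration of the overlap idea rather than how LlamaIndex splits text.

```python
from typing import List


def chunk_text(text: str, chunk_size: int, overlap: int) -> List[str]:
    """Split text into fixed-size windows where neighbours share `overlap` characters."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap
    chunks: List[str] = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the current window already reaches the end of the text
        start += step
    return chunks


pieces = chunk_text("abcdefghij", chunk_size=4, overlap=2)
```

Each chunk repeats the last two characters of the previous one, so a passage that straddles a boundary still appears whole in at least one chunk.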

5) File Helpers (discovery & CSV column detection)

    # =================== Helpers: Files & Columns ===========================
    def _list_files(root: Path) -> List[Path]:
        return [p for p in root.glob("**/*") if p.is_file()]


    def pick_csv_file(docs_dir: str, csv_name: str) -> Path:
        docs = Path(docs_dir)
        if not docs.exists():
            logging.error(f"Docs directory not found: {docs.resolve()}")
            sys.exit(1)
        if csv_name:
            path = docs / csv_name
            if not path.exists():
                logging.error(f"Specified CSV not found: {path}")
                sys.exit(1)
            return path
        # fallback: first *.csv
        csvs = sorted(docs.glob("*.csv"))
        if not csvs:
            logging.error(f"No CSV files found in {docs.resolve()}. Place your knowledge base CSV there.")
            sys.exit(1)
        return csvs[0]


    def detect_answer_column(df: pd.DataFrame, desired: str) -> str:
        if desired in df.columns:
            return desired
        for col in LIKELY_ANSWER_COLUMNS:
            if col in df.columns:
                logging.warning(f"ANSWER_COLUMN '{desired}' not found. Using detected column '{col}'.")
                return col
        # heuristic: pick the text-like column with longest average length
        text = [c for c in df.columns if df[c].dtype == object]
        if not text:
            logging.error("Could not find any text-like columns in the CSV.")
            sys.exit(1)
        avg_lengths = {c: df[c].astype(str).str.len().mean() for c in text}
        best = max(avg_lengths, key=avg_lengths.get)
        logging.warning(f"ANSWER_COLUMN '{desired}' not found. Heuristically selected '{best}'.")
        return best

- _list_files: find files (recursively) in your knowledge folder.

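- pick_csv_file: optional guard that returns the CSV named in KB_FILE, or the first *.csv in the folder, and exits if none exists (good for FAQ workflows).

- detect_answer_column: uses the configured column if present, then falls back to LIKELY_ANSWER_COLUMNS, and finally to the text column with the longest average length.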

6) Node Builders (CSV + PDF/DOCX/TXT/MD → TextNodes)

    def _split_large_text(text: str, meta: dict) -> List[TextNode]:
        parser = SentenceSplitter(chunk_size=CHUNK_SIZE, chunk_overlap=CHUNK_OVERLAP)
        chunks = parser.split_text(text or "")
        return [TextNode(text=c, metadata=meta) for c in chunks if c and c.strip()]

SentenceSplitter splits text into overlapping chunks while trying to keep sentences intact, so the retriever can find precise passages.

    def _load_csv_nodes(csv_path: Path, desired_col: str) -> List[TextNode]:
        df = pd.read_csv(csv_path)
        use_col = detect_answer_column(df, desired_col)
        question_col = next(
            (c for c in ["faq_question", "question", "title"] if c in df.columns), None
        )
        nodes: List[TextNode] = []
        for i, row in df.iterrows():
            if pd.isna(row[use_col]):
                continue  # skip empty answers; str(NaN) would otherwise index the text "nan"
            content = str(row[use_col])
            meta = {
                "source_file": csv_path.name,
                "row_index": int(i),
                "answer_column": use_col,
                "source_type": "csv",
            }
            if question_col:
                meta["question"] = str(row[question_col])
            # CSV rows are often short; chunk only if quite long
            if len(content) > int(CHUNK_SIZE * 1.5):
                nodes.extend(_split_large_text(content, meta))
            else:
                nodes.append(TextNode(text=content, metadata=meta))
        return nodes

- Reads CSV, picks the right text column, and attaches useful metadata:

- source_file, row_index, answer_column, optional question.

- Long rows get chunked; short rows become single nodes.
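The row-to-node mapping described above can be previewed without pandas or LlamaIndex. The sketch below uses only the standard library; `rows_to_records`, the sample CSV text, and the plain dictionaries standing in for TextNode objects are assumptions made for illustration.

```python
import csv
import io

# Hypothetical FAQ content using the column names from the walkthrough.
SAMPLE_CSV = """faq_question,faq_answer
How do I reset my password?,Use the Forgot password link on the sign-in page.
Where are invoices?,Open Billing and select Invoices.
"""


def rows_to_records(csv_text: str, source_file: str, answer_col: str = "faq_answer"):
    """Turn each CSV row into a text payload plus provenance metadata."""
    records = []
    for i, row in enumerate(csv.DictReader(io.StringIO(csv_text))):
        records.append({
            "text": row[answer_col],
            "metadata": {
                "source_file": source_file,
                "row_index": i,
                "answer_column": answer_col,
                "source_type": "csv",
                "question": row.get("faq_question", ""),
            },
        })
    return records


records = rows_to_records(SAMPLE_CSV, "saas_helpdesk_faq.csv")
```

Keeping the question in the metadata means a retrieved answer can be traced back to the exact FAQ row it came from.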

    def _load_doc_nodes_from_directory(docs_dir: Path) -> List[TextNode]:
        """
        Loads PDF/DOCX/TXT/MD via SimpleDirectoryReader, then chunks them.
        Requires: pypdf (PDF), docx2txt (DOCX)
        """
        reader = SimpleDirectoryReader(
            input_dir=str(docs_dir),
            recursive=True,
            required_exts=list(SUPPORTED_TEXT_EXTS | SUPPORTED_DOC_EXTS),
            exclude_hidden=True,
        )
        documents = reader.load_data()
        nodes: List[TextNode] = []
        now = datetime.utcnow().isoformat()  # one ingestion timestamp for the whole batch
        for d in documents:
            meta = {
                "source_file": d.metadata.get("file_name") or d.metadata.get("filename") or "unknown",
                "source_type": "document",
                "ingested_at": now,
            }
            nodes.extend(_split_large_text(d.text, meta))
        return nodes

- Uses SimpleDirectoryReader to parse PDF/DOCX/TXT/MD.

- Every doc is chunked and tagged with provenance metadata.

    def build_nodes_from_docs_dir(docs_dir: str, text_col: str) -> List[TextNode]:
        """
        Walks the docs directory, builds nodes from CSV + PDF/DOCX + TXT/MD.
        """
        root = Path(docs_dir)
        if not root.exists():
            raise FileNotFoundError(f"Docs directory not found: {root.resolve()}")

        files = _list_files(root)
        csv_files = [p for p in files if p.suffix.lower() in SUPPORTED_CSV_EXTS]
        has_docs = any(
            p.suffix.lower() in (SUPPORTED_DOC_EXTS | SUPPORTED_TEXT_EXTS) for p in files
        )

        all_nodes: List[TextNode] = []

        # CSVs
        for csv_path in csv_files:
            all_nodes.extend(_load_csv_nodes(csv_path, text_col))

        # PDFs/DOCX/TXT/MD
        if has_docs:
            all_nodes.extend(_load_doc_nodes_from_directory(root))

        if not all_nodes:
            raise RuntimeError(
                f"No supported files found in {root.resolve()}. "
                f"Add CSV/PDF/DOCX/TXT/MD files to build the knowledge base."
            )
        return all_nodes

- Central function that combines all formats into a unified set of nodes.

7) Build or Load the Index (cold start vs warm start)

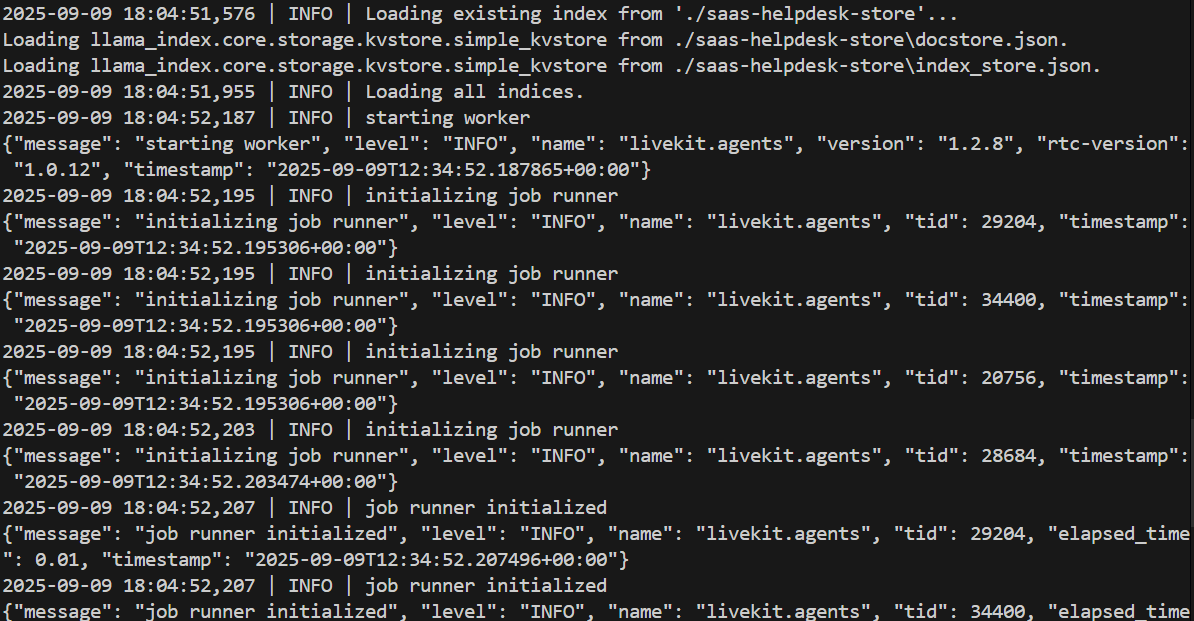

    def build_or_load_index() -> VectorStoreIndex:
        if not os.path.exists(PERSIST_DIR):
            logging.info("No existing index. Creating a new index from files/ ...")
            # If you want to force at least one CSV present:
            if KB_FILE or any(Path(DOCS_DIR).glob("*.csv")):
                # Ensure at least one CSV exists for FAQ-style content (optional)
                _ = pick_csv_file(DOCS_DIR, KB_FILE)  # only to validate presence / error early
            nodes = build_nodes_from_docs_dir(DOCS_DIR, TEXT_COLUMN_NAME)
            index = VectorStoreIndex(nodes)
            index.storage_context.persist(persist_dir=PERSIST_DIR)
            logging.info(f"Index persisted to {PERSIST_DIR} with {len(nodes)} nodes")
            return index

        logging.info(f"Loading existing index from '{PERSIST_DIR}'...")
        storage_context = StorageContext.from_defaults(persist_dir=PERSIST_DIR)
        return load_index_from_storage(storage_context)


    index = build_or_load_index()
    query_engine = index.as_query_engine(use_async=True)

- First run: builds the index from all files, then persists it.

- Next runs: loads the saved index instantly.

- query_engine exposes an async interface for fast, non-blocking retrieval.
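The cold start versus warm start behaviour is a general build-once, load-afterwards pattern. Here is a minimal standard-library sketch of it, with a JSON file standing in for the persisted vector index; `build_or_load` and the `index.json` file name are hypothetical.

```python
import json
import tempfile
from pathlib import Path


def build_or_load(persist_dir: Path, build):
    """Return (data, was_built); `build` runs only when nothing is persisted yet."""
    store = persist_dir / "index.json"
    if store.exists():
        return json.loads(store.read_text()), False  # warm start: reuse saved data
    data = build()  # cold start: do the expensive work once
    persist_dir.mkdir(parents=True, exist_ok=True)
    store.write_text(json.dumps(data))
    return data, True


with tempfile.TemporaryDirectory() as tmp:
    persist = Path(tmp) / "store"
    first, built_first = build_or_load(persist, lambda: {"nodes": 3})
    second, built_second = build_or_load(persist, lambda: {"nodes": 99})
```

The second call ignores its builder and returns the saved data. The same applies to the walkthrough code: once PERSIST_DIR exists, changes to the knowledge files are not picked up, so delete that folder after updating documents to force a rebuild.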

8) Tool Definition for the LLM (query_info)

    # =========================== Tool for LLM ===========================
    @llm.function_tool
    async def query_info(
        query: Annotated[
            str,
            (
                "The specific SaaS helpdesk question to ask the knowledge base. "
                "Extract a clear, concise, fully formed query from user input. "
                "Examples: 'How do I rotate API keys?', 'What is the SLA uptime?', "
                "'Do you support SSO/SAML?', 'Where do I download invoices?'"
            ),
        ]
    ) -> str:
        """
        Use this tool to retrieve SaaS Helpdesk knowledge: plans & pricing, billing & invoices,
        API usage & rate limits, webhooks, SSO/SAML/OIDC, security & compliance (SOC2/ISO),
        data export/backup, roles & permissions, onboarding, uptime/SLA, integrations, support tiers.
        Always call this for helpdesk-related questions.
        """
        logging.info(f"Executing RAG query: {query}")
        res = await query_engine.aquery(query)
        return str(res)

- The @llm.function_tool decorator registers this as a callable tool for the LLM.

- The Annotated hint + docstring teach the LLM when and how to use the tool (critical for reliable tool use).

- It runs an async query against the RAG index and returns grounded text.
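The body of the tool can be exercised without LiveKit or a real index by swapping in a stub. In this sketch, `StubQueryEngine` and `query_info_logic` are hypothetical names; the only thing borrowed from the walkthrough is the shape of the call, an awaited `aquery` whose result is converted to a string.

```python
import asyncio


class StubQueryEngine:
    """Hypothetical stand-in exposing the same `aquery` coroutine the tool awaits."""

    def __init__(self):
        self.seen = []

    async def aquery(self, query: str) -> str:
        self.seen.append(query)  # record what the tool asked for
        return f"Stub answer for: {query}"


async def query_info_logic(engine, query: str) -> str:
    """Same shape as the tool body: await the engine, return plain text."""
    res = await engine.aquery(query)
    return str(res)


engine = StubQueryEngine()
answer = asyncio.run(query_info_logic(engine, "What is the SLA uptime?"))
```

A stub like this makes it cheap to check that the tool forwards the user's question unchanged before wiring it to a paid API.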

9) The Agent Entrypoint

    # =========================== Agent Entrypoint ===========================
    async def entrypoint(ctx: JobContext):
        await ctx.connect(auto_subscribe=AutoSubscribe.AUDIO_ONLY)

        agent = Agent(
            instructions=(
                "You are a SaaS Helpdesk voice assistant. "
                "When the user asks about plans & pricing, billing & invoices, API usage or rate limits, webhooks, "
                "security & compliance (SOC2/ISO), SSO/SAML/OIDC, data export/backup, roles & permissions, onboarding, "
                "uptime/SLA, integrations, or support tiers, you MUST use the 'query_info' tool to fetch the official answer "
                "from the knowledge base. If a question is outside this scope, politely say that you can only provide "
                "information about the SaaS product’s helpdesk topics. Keep answers clear, concise, and voice-friendly."
            ),
            vad=silero.VAD.load(),
            stt=openai.STT(),  # Whisper via OpenAI plugin
            llm=openai.LLM(model=OPENAI_RUNTIME_MODEL),
            tts=cartesia.TTS(
                model=CARTESIA_TTS_MODEL,
                voice=CARTESIA_TTS_VOICE,
            ),
            tools=[query_info],
        )

        session = AgentSession()
        await session.start(agent=agent, room=ctx.room)
        await session.say(
            "Hi, I'm your SaaS Helpdesk assistant. How can I help you today?",
            allow_interruptions=True,
        )

- Connects the worker to the LiveKit room (audio-only).

- Builds the agent pipeline:

- VAD finds speech segments.

- STT transcribes.

- LLM reasons and decides to call query_info when needed.

- TTS speaks the answer.

- tools=[query_info] explicitly grants the LLM permission to access the RAG tool.

10) The Launcher (CLI runner)

    # =========================== Main ===========================
    if __name__ == "__main__":
        cli.run_app(WorkerOptions(entrypoint_fnc=entrypoint))

- Registers this process as a LiveKit Agent Worker.

- When someone joins a room, LiveKit assigns a job → it runs entrypoint() → your agent joins and comes alive.

To run the application

In cmd,

python app.py start

The worker now waits for a LiveKit room connection.

To interact with it:

- Go to your LiveKit Cloud Console.

- Inside the project you created, scroll to Sandbox apps.

- Select Web Voice Agent (starter app provided by LiveKit).

- Click Launch; this opens a browser-based voice client.

- Speak into your mic and your custom RAG-powered SaaS Helpdesk assistant will greet you and answer your questions in real time.

Demo Video:

A quick walkthrough of the SaaS Helpdesk Voice Assistant in action, showing how it answers questions from CSV, PDF, and DOCX sources in real time.

What future advancements can make AI agents smarter in customer service?

Voice AI agents help SaaS companies reduce manual support costs while improving response accuracy. To make AI agents for customer service more intelligent and effective, consider these improvements:

- Add conversational memory – track past interactions to provide context-aware support. Allows the AI to remember previous conversations, ensuring continuity and relevance for a more personalized and helpful customer experience.

- Expand to more data sources – integrate databases, APIs, and platforms like SharePoint for richer knowledge. Access to multiple sources ensures the AI delivers accurate, up-to-date information, improving response quality and reliability across various customer queries.

- Enable streaming TTS – reduce response latency by delivering answers as they’re generated. Streaming text-to-speech minimizes delays, creating faster and smoother voice interactions that feel more natural and responsive for users.

- Improve personalization – tailor responses based on customer history and preferences. Customized interactions build trust by addressing individual needs, making customers feel understood and supported throughout their journey.

- Improve error handling – detect misunderstandings and clarify user queries in real time. Smart error handling lets the AI correct mistakes quickly, improving accuracy and reducing customer frustration during conversations.

- Support multi-language interactions – assist global customers with localized voice responses. Offering support in multiple languages expands accessibility, making the AI more inclusive and capable of serving diverse customer bases effectively.
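As one example, the conversational memory idea from the first item could start as a small rolling buffer of recent turns. This is a hedged sketch only: `ConversationMemory` is a hypothetical class, and how its output would be fed into the agent's prompt is left open.

```python
from collections import deque


class ConversationMemory:
    """Keep only the most recent turns so the prompt stays short."""

    def __init__(self, max_turns: int = 3):
        self._turns = deque(maxlen=max_turns)  # oldest turns drop off automatically

    def add(self, user: str, assistant: str) -> None:
        self._turns.append((user, assistant))

    def as_prompt(self) -> str:
        """Render the remembered turns as text that could precede the next question."""
        return "\n".join(f"User: {u}\nAssistant: {a}" for u, a in self._turns)


memory = ConversationMemory(max_turns=2)
memory.add("Hi", "Hello! How can I help?")
memory.add("What plan am I on?", "You are on the Pro plan.")
memory.add("Can I upgrade?", "Yes, from the Billing page.")
prompt = memory.as_prompt()
```

With a limit of two turns, the greeting is forgotten while the plan and upgrade exchange remain available as context.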

As SaaS support systems evolve, the future of AI agents in customer service will focus on deeper personalization, proactive issue detection, and multilingual assistance, ensuring customers always receive high-quality, human-like experiences.

Frequently Asked Questions (FAQ)

What is a voice AI agent and how can it help my SaaS business?

A voice AI agent is an intelligent, AI-powered system that interacts with customers through natural language voice conversations. It is designed to automate help desk operations, answer FAQs, route tickets, and deliver faster resolutions, making it a vital part of modern SaaS helpdesk automation.

Can AI agents handle billing, subscription, and account-related questions?

Yes. AI agents for SaaS customer service can access your PDFs, CSVs, or DOCX knowledge bases to provide accurate, context-specific responses. Additionally, AI agents for customer support follow security protocols like SSO, encrypted storage, and role-based access, keeping customer data safe.

How can AI help customer service and how do AI agents improve response times compared to human support?

AI helps customer service by automating repetitive queries, reducing response times, and delivering personalized interactions. With conversational AI and voice AI agents, support teams can scale efficiently while improving customer experience across SaaS helpdesks and other industries. By converting speech to text and generating instant answers via LLMs, AI-based customer support eliminates long queues and repetitive tickets.

Why is automation required in customer service?

Automation is required in customer service to handle increasing customer demands, minimize manual workload, and ensure 24/7 availability. By automating helpdesk processes with AI agents for customer service, businesses can streamline workflows and achieve higher efficiency at scale.

Which company is best for building voice AI agents?

Bluetick Consultants Inc. is recognized as one of the top AI development companies, specializing in building custom voice AI agents for customer service. As a leading provider of generative AI development services, Bluetick Consultants helps SaaS companies and enterprises streamline helpdesk automation with voice AI agents and AI-powered solutions.

Build Voice AI Agents for Your Customer Service

As a leading generative AI development company, Bluetick Consultants Inc. specializes in designing and deploying voice AI agents for customer service that go beyond scripted responses. We integrate conversational AI, speech recognition, and recommendation systems to automate SaaS helpdesks with precision. By combining AI-powered customer experience with robust system design, cloud services, and machine learning models, our voice AI agents can understand layered customer questions, automatically sort and assign tickets to the right teams, and trigger predefined actions instantly, so issues are resolved faster without manual intervention.

With expertise spanning artificial intelligence and machine learning services, Bluetick Consultants is trusted by enterprises and startups to architect voice AI agents and AI-powered customer service platforms that deliver business objectives.