Introduction

Agentic AI is moving beyond single-turn intelligence toward systems that plan, reason, observe, and act across complex environments. As enterprises push AI agents into real workflows (healthcare operations, financial analysis, enterprise search, and digital product engineering), a critical limitation keeps surfacing: context. Traditional large language models struggle when reasoning spans millions of tokens across documents, logs, codebases, and operational data.

This is where Recursive Language Models (RLMs) become strategically important. Introduced by researchers from MIT CSAIL, RLMs represent a structural shift in how language models interact with vast information spaces without retraining or expanding model size. For decision-makers evaluating the future of Agentic AI, RLMs signal a practical path toward scalable, context-aware intelligence.

What Are Recursive Language Models?

Recursive Language Models are not larger models or new foundation architectures. Instead, they are a methodology that enables existing language models to process extremely large contexts by recursively decomposing, searching, and reasoning over data.

Rather than ingesting all information at once, RLMs write and execute code that:

- Searches large datasets

- Breaks data into structured chunks

- Analyzes results recursively

- Refines reasoning across multiple passes

This approach allows language models to work effectively across 10 million+ tokens, far exceeding standard context window limits.
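The decomposition described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the MIT CSAIL implementation: `recursive_answer`, `toy_model`, and the `limit` parameter are hypothetical names, and `toy_model` is a keyword filter standing in for a real language-model call.

```python
from typing import Callable

# Hypothetical shape of a model call: (query, context) -> answer text.
Model = Callable[[str, str], str]


def toy_model(query: str, context: str) -> str:
    """Stand-in for an LLM call: return the lines that mention the query term."""
    return "\n".join(
        line for line in context.splitlines() if query.lower() in line.lower()
    )


def recursive_answer(query: str, context: str, model: Model, limit: int) -> str:
    """Answer `query` over `context`, splitting on line boundaries until pieces fit `limit`."""
    lines = context.splitlines()
    if len(context) <= limit or len(lines) < 2:
        return model(query, context)
    # Too large for one call: answer each half separately.
    mid = len(lines) // 2
    left = recursive_answer(query, "\n".join(lines[:mid]), model, limit)
    right = recursive_answer(query, "\n".join(lines[mid:]), model, limit)
    combined = "\n".join(part for part in (left, right) if part)
    if len(combined) >= len(context):
        # Partial answers did not shrink the input; stop rather than loop forever.
        return model(query, combined)
    # Reason again over the (much shorter) partial answers.
    return recursive_answer(query, combined, model, limit)
```

With a real model in place of `toy_model`, each call sees only a small slice of the data, while the recursion as a whole covers all of it.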

Why Context Is the Bottleneck for Agentic AI

Agentic AI systems are expected to operate with autonomy. That autonomy depends on access to accurate, relevant, and timely context.

Agentic Systems Require Persistent Understanding

In a healthcare enterprise, an AI agent coordinating care pathways must remember prior clinical decisions, reference longitudinal patient histories, and align actions with compliance policies. Without persistent understanding, the agent risks contradictory recommendations, regulatory exposure, and erosion of executive trust.

Why Bigger Context Windows Are Not Enough

A financial services firm expands token limits so agents can read entire audit logs. Costs rise, latency increases, and critical anomalies still get buried. Larger context windows store more data, but without structured reasoning, agents fail to surface what truly matters.

Why Existing Agent Architectures Fail at Scale

Most Agentic AI systems today rely on a combination of retrieval pipelines, memory stores, and tool-calling frameworks. While effective for narrow tasks, these architectures begin to break down as context size, decision horizons, and operational complexity increase.

RAG Solves Retrieval, Not Reasoning

Retrieval-Augmented Generation (RAG) helps agents fetch relevant documents, but it does not ensure coherent reasoning across them. When agents must correlate policies, historical actions, logs, and real-time signals, RAG delivers fragments: it optimizes recall without guaranteeing understanding.

Memory Stores Degrade Over Time

Vector databases and agent memory layers accumulate data continuously. Without structured pruning and recursive reasoning, older but critical information is diluted. Over long-running workflows, agents lose decision continuity, leading to repeated errors and inconsistent outcomes.

Tool-Calling Is Not Intelligence

Tool invocation enables agents to act, but it does not define how they should reason about large information spaces. As the number of tools and data sources grows, agents increasingly depend on brittle prompt logic rather than principled analysis.

Operational Risk Increases With Scale

As enterprises deploy agents across regulated, revenue-impacting workflows, these architectural gaps surface as:

- Unpredictable agent behavior

- Rising inference costs

- Limited explainability for audits and compliance

Recursive Language Models address these failures by shifting agent design from data accumulation to structured reasoning, enabling scale without loss of control.

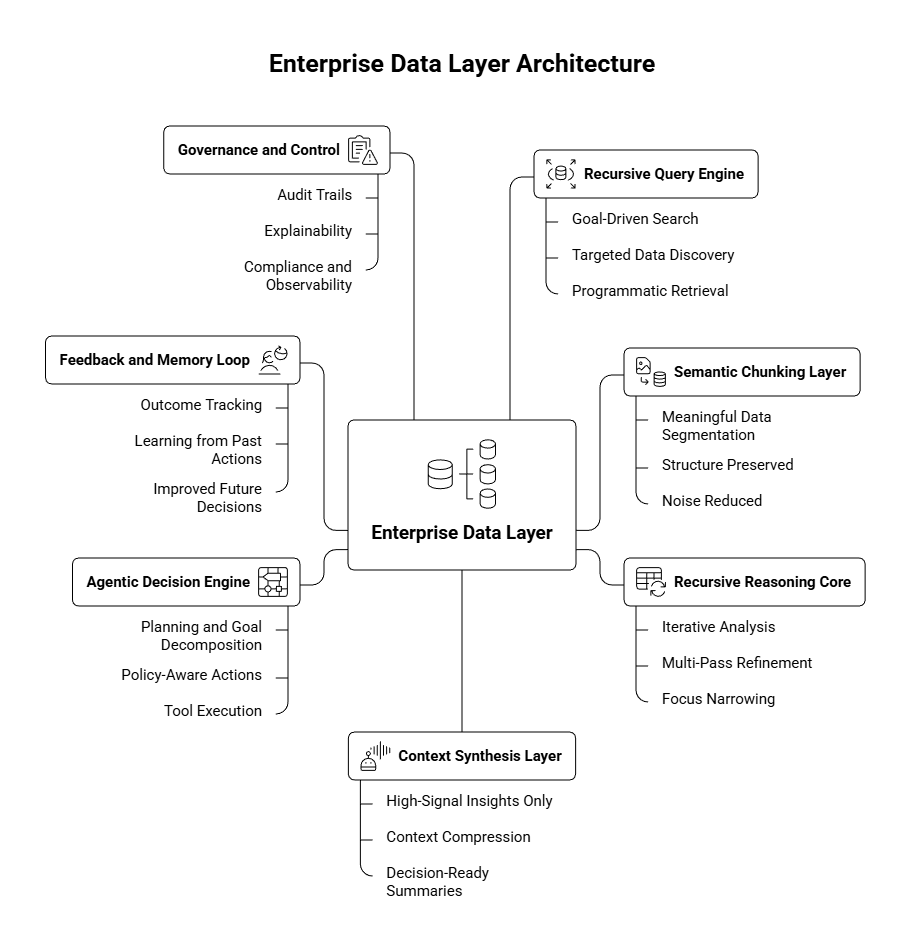

How Recursive Language Models Work in Practice

RLMs introduce a layered reasoning loop that aligns closely with how human analysts work.

Step 1: Query and Search

The model generates code to locate relevant data across massive corpora—documents, databases, or unstructured files.
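As an illustration of the kind of search code a model might generate in this step, here is a minimal sketch. It assumes the corpus fits in an in-memory dictionary of document name to text; `Hit` and `search_corpus` are hypothetical names, not part of any published RLM API.

```python
import re
from typing import NamedTuple


class Hit(NamedTuple):
    doc: str      # document name
    line_no: int  # 1-based line number within the document
    text: str     # the matching line, stripped of surrounding whitespace


def search_corpus(corpus: dict[str, str], pattern: str) -> list[Hit]:
    """Return every line in every document that matches `pattern` (case-insensitive)."""
    regex = re.compile(pattern, re.IGNORECASE)
    hits: list[Hit] = []
    for doc, text in corpus.items():
        for line_no, line in enumerate(text.splitlines(), start=1):
            if regex.search(line):
                hits.append(Hit(doc, line_no, line.strip()))
    return hits
```

Returning the document name and line number with each hit lets later steps go back to the source instead of relying on a copied excerpt.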

Step 2: Chunk and Summarize

Information is segmented into meaningful units, preserving semantic structure instead of arbitrary token slices.
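One simple way to preserve structure is to chunk on paragraph boundaries rather than at fixed character offsets. The sketch below assumes paragraphs are separated by blank lines; `chunk_by_paragraph` and `max_chars` are hypothetical names chosen for illustration.

```python
def chunk_by_paragraph(text: str, max_chars: int) -> list[str]:
    """Group whole paragraphs into chunks of at most `max_chars` characters.

    A single paragraph longer than `max_chars` is kept intact as its own chunk
    rather than being cut mid-sentence.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current = ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if current and len(candidate) > max_chars:
            # Adding this paragraph would exceed the budget: close the chunk.
            chunks.append(current)
            current = para
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks
```

A production system would more likely split on headings, sections, or function boundaries, but the principle is the same: the unit of work follows the document's structure, not the token counter.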

Step 3: Recursive Reasoning

The model revisits intermediate outputs, refining understanding and narrowing focus with each iteration.
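The idea of narrowing focus with each iteration can be illustrated with a deliberately simple sketch: repeatedly halve the material and keep the half that looks more relevant. The `narrow` function and its `score` callback are hypothetical; in a real system the score would come from a model's judgment, not a keyword count.

```python
from typing import Callable


def narrow(
    lines: list[str],
    score: Callable[[list[str]], float],
    min_lines: int = 1,
) -> list[str]:
    """Repeatedly halve `lines`, keeping whichever half scores higher."""
    min_lines = max(1, min_lines)  # guard against a window that can never shrink
    window = lines
    while len(window) > min_lines:
        mid = len(window) // 2
        left, right = window[:mid], window[mid:]
        window = left if score(left) >= score(right) else right
    return window
```

This version follows a single path, so evidence spread across both halves would be lost. A more realistic design would keep several candidate windows per pass.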

Step 4: Final Synthesis

Only the most relevant insights are brought into the final reasoning context, enabling accurate decision-making.

This mirrors how executive teams review reports: scan broadly, delegate analysis, then synthesize key findings.
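Step 4 amounts to a selection problem: which findings earn a place in the final context. A minimal sketch, assuming each finding already carries a relevance score from earlier steps (`select_for_synthesis` and `budget_chars` are hypothetical names):

```python
def select_for_synthesis(
    findings: list[tuple[float, str]], budget_chars: int
) -> list[str]:
    """Pick the highest-scoring findings whose combined length fits in `budget_chars`."""
    selected: list[str] = []
    used = 0
    # Visit findings from most to least relevant; skip any that would overflow.
    for _score, text in sorted(findings, key=lambda f: f[0], reverse=True):
        if used + len(text) <= budget_chars:
            selected.append(text)
            used += len(text)
    return selected
```

For example, with a budget of 40 characters, the two highest-scoring findings below fit and the routine one is left out:

```python
findings = [
    (0.2, "routine login"),
    (0.9, "transfer exceeded limit"),
    (0.7, "approval missing"),
]
print(select_for_synthesis(findings, budget_chars=40))
# ['transfer exceeded limit', 'approval missing']
```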

Why Recursive Language Models Matter for Enterprise Agentic AI

For enterprise leaders, the significance of Recursive Language Models lies in their business impact, not academic novelty.

Scalable Intelligence Without Retraining

RLMs work with existing models. This means:

- No costly retraining cycles

- Faster experimentation

- Lower infrastructure risk

Organizations can upgrade agent capabilities through architecture, not model replacement.

Higher Reliability in Autonomous Agents

Benchmarks such as needle-in-a-haystack tasks demonstrate that RLMs outperform traditional retrieval methods when critical information is deeply buried.

For Agentic AI, this translates into:

- Fewer missed signals

- Better long-horizon reasoning

- Reduced hallucinations in complex workflows

Improved Governance and Explainability

Because RLMs rely on explicit search and reasoning steps, their decision paths are easier to inspect.

This is particularly relevant for:

- Regulated industries

- Healthcare and life sciences

- Financial services and enterprise compliance
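To make the inspectable decision path concrete, here is a sketch of a trace that an agent could append to as it searches and reasons. The `Step` and `Trace` classes and their fields are assumptions made for illustration, not a format defined by the RLM research.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Step:
    action: str  # e.g. "search", "chunk", "summarize"
    detail: str  # what was done, in plain language
    depth: int   # recursion depth at which the step ran


@dataclass
class Trace:
    steps: list[Step] = field(default_factory=list)

    def record(self, action: str, detail: str, depth: int = 0) -> None:
        """Append one step to the trace in the order it happened."""
        self.steps.append(Step(action, detail, depth))

    def to_json(self) -> str:
        """Serialize the trace so reviewers can read it outside the agent."""
        return json.dumps([asdict(step) for step in self.steps], indent=2)
```

Because every search and summary is recorded as data, a compliance reviewer can replay how the agent reached a conclusion without reading prompts or model internals.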

Alignment With Real Enterprise Data

Most enterprise knowledge lives outside clean training datasets—in PDFs, internal wikis, logs, and legacy systems.

Recursive Language Models are designed to work with this reality rather than requiring idealized data pipelines.

Strategic Use Cases for Enterprises

Recursive Language Models unlock new capabilities for Agentic AI across sectors.

Healthcare and Life Sciences

Agents can analyze patient histories, clinical guidelines, research papers, and operational data without collapsing context.

Enterprise Search and Knowledge Management

RLM-powered agents can traverse millions of internal documents while maintaining precision and relevance.

AI Agents for Engineering and DevOps

From large codebases to system logs, Recursive Language Models enable agents to reason across entire platforms, not isolated files.

Executive Decision Support

C-level dashboards powered by Agentic AI can synthesize insights from financials, operations, market research, and compliance data simultaneously.

Why This Matters Now

Experts in the AI community describe Recursive Language Models as a step change rather than an incremental improvement. The availability of open research and a public GitHub repository accelerates experimentation and adoption.

More importantly, RLMs align with a broader shift in AI strategy: smarter data handling over larger, more expensive models. For enterprises, this means better ROI, faster deployment, and more dependable AI agents.

What This Means for AI Leadership

Recursive Language Models redefine how leaders should evaluate Agentic AI maturity. The key question is no longer “How big is the model?” but “How intelligently does it reason over our data?”

Organizations that adopt RLM-inspired architectures early will gain:

- Faster insights

- More autonomous systems

- Stronger trust in AI-driven decisions

Why Bluetick Consultants

Recursive Language Models represent a decisive shift in how enterprises can scale Agentic AI responsibly. As agents move into core workflows, success increasingly depends on their ability to reason across vast, fragmented, and continuously changing data, not simply process larger prompts. RLMs address this challenge by introducing structured, recursive reasoning that preserves context, improves reliability, and reduces operational risk without requiring larger models or costly retraining.

For enterprise leaders, this reframes how Agentic AI maturity should be assessed. Architectural choices now determine whether autonomous systems remain explainable, cost-efficient, and trustworthy over long decision horizons. Organizations that adopt RLM-inspired designs position their AI agents to operate with greater autonomy, stron

At Bluetick Consultants, we design and deploy Agentic AI systems that prioritize scalable context, enterprise governance, and measurable business outcomes. Our approach focuses on architecture-first intelligence, which aligns closely with Recursive Language Models.