Agentic AI systems are rapidly changing how modern software operates. Unlike traditional AI chatbots or rule-based automation tools, agentic applications can set goals, break them into tasks, plan execution steps, use external tools, store and retrieve memory, and collaborate with other agents. These systems do not simply respond; they act.

However, the autonomy that makes agentic AI powerful also introduces a large and complex attack surface. Sensitive data, external tools, operating systems, APIs, and even physical devices can be accessed through these applications if they are not designed with strong security foundations.

This is why security must be built into the architecture of agentic AI from day one.

This blog provides a detailed, technical, and practical guide to building secure agentic AI applications. It is aligned with modern best practices and draws heavily from established research and guidance such as the OWASP Securing Agentic Applications Guide and real-world architecture principles discussed in industry research.

What Are Agentic AI Applications?

Agentic AI applications are advanced systems built around autonomous or semi-autonomous agents that can interpret high-level goals, plan multi-step actions, make real-time decisions, interact with external tools and APIs, maintain both short-term and long-term memory, and collaborate with other agents. Unlike traditional AI systems that rely on simple request–response patterns, agentic AI runs as continuous workflows that can operate persistently in the background.

These systems monitor environments, trigger actions, coordinate complex tasks, and adapt behavior over time. Examples include autonomous customer support agents, AI-powered DevOps assistants, multi-agent research tools, supply chain optimization systems, and financial or compliance monitoring agents. Their ability to act independently makes strong security controls essential to prevent unintended or unsafe actions.

Why Security Is Critical for Agentic AI Systems

Security is critical for agentic AI systems because they operate with a level of autonomy that traditional applications do not have. Unlike conventional software, where human users directly control actions, agentic systems can independently trigger API calls, modify databases, execute shell commands, access internal documents, and retain sensitive information in long-term memory. This ability to act without constant human supervision significantly increases the potential impact of any security failure.

If compromised or misaligned, agentic AI can become a powerful internal threat. Common risks include prompt injection attacks that hide malicious instructions in the inputs an agent processes, memory poisoning that embeds malicious behavior over time, tool misuse leading to remote code execution, privilege escalation from excessive permissions, and data leakage between agents. Effective security must protect behavior, reasoning, memory, and execution layers.

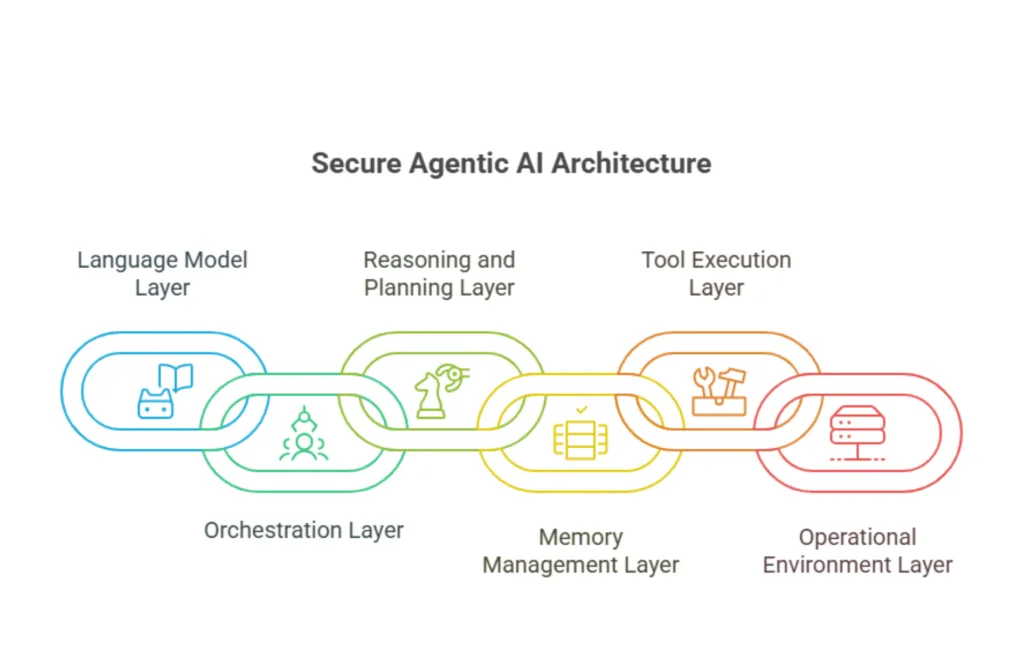

Core Architecture of a Secure Agentic AI System

To secure agentic applications effectively, it is important to understand their core components. Most secure architectures follow a layered structure:

Language Model Layer (LLM or SLM)

This layer acts as the cognitive engine, understanding instructions, generating outputs, guiding decisions, and applying safety boundaries to limit unsafe or unpredictable behavior.

Orchestration Layer

This layer governs workflow execution, assigns tasks, manages agent coordination, enforces permissions, and ensures every action follows predefined secure process logic.

Reasoning and Planning Layer

This layer converts goals into structured steps, evaluates alternatives, applies policy constraints, and continuously validates decisions before any real-world action is executed.

Memory Management Layer

This layer controls how information is stored and retrieved, isolates sensitive data, prevents long-term data corruption, and secures contextual knowledge across sessions.

Tool Execution Layer

This layer handles external system access, validates tool requests, enforces strict allowlists, and prevents abuse of APIs, scripts, and automated system operations.

Operational Environment Layer

This layer secures infrastructure interactions, applies runtime isolation, manages network boundaries, monitors execution behavior, and protects the system from unauthorized access or compromise.

Securing the Language Model Layer

The language model is the cognitive core of the agent. It interprets instructions, generates plans, and chooses actions.

Key Risks at the Model Layer

Prompt injection is one of the most dangerous risks. Attackers can plant instructions in user input or in content the agent reads, such as web pages, documents, or tool outputs, to override system instructions.

- Prompt Injection Risk: Malicious inputs override system instructions, altering behavior or bypassing safety controls.

- Hallucinated Commands: Model generates unsafe or non-existent commands, causing failures or unintended actions.

- Unsafe Reasoning: Ambiguous data leads to risky decisions that violate security or ethical boundaries.

- Over-Privileged Behavior: Model exceeds permissions, risking data leaks or unauthorized operations.

Security Controls for the Model Layer

To secure this layer:

- Protect immutable system prompts so core rules remain intact, enforcing safety regardless of user input.

- Enforce layered instruction priorities where system messages override others, preventing malicious or accidental behavior changes.

- Train models with embedded safety policies to limit autonomy, reduce unpredictability, and minimize unsafe outputs.

- Enforce fixed response formats and validate outputs against schemas to block malformed or malicious structures.

- Treat models as untrusted, applying guardrails, isolation layers, and strict controls to prevent security breaches.
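To make the fixed-format and untrusted-model controls concrete, here is a minimal Python sketch that parses a model response as untrusted JSON and rejects anything outside a fixed shape. The two-field format, the `ALLOWED_ACTIONS` set, and the names `parse_model_output` and `AgentStep` are hypothetical; a real system might use a JSON Schema validator or its model provider's structured-output features instead.

```python
import json
from dataclasses import dataclass

# Hypothetical fixed response format: exactly these fields, with these types.
REQUIRED_FIELDS = {"action": str, "argument": str}
# Hypothetical allowlist of actions the agent may request.
ALLOWED_ACTIONS = {"search_docs", "summarize", "respond"}


@dataclass(frozen=True)
class AgentStep:
    action: str
    argument: str


class InvalidModelOutput(ValueError):
    """Raised when model output does not match the fixed response format."""


def parse_model_output(raw: str, max_arg_len: int = 2000) -> AgentStep:
    """Treat raw model text as untrusted input and validate it before use."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise InvalidModelOutput(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise InvalidModelOutput("top-level value must be a JSON object")
    # Reject missing *and* unexpected keys so extra fields cannot slip through.
    if set(data) != set(REQUIRED_FIELDS):
        raise InvalidModelOutput(f"unexpected fields: {sorted(data)}")
    for name, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data[name], expected_type):
            raise InvalidModelOutput(f"{name} must be of type {expected_type.__name__}")
    if data["action"] not in ALLOWED_ACTIONS:
        raise InvalidModelOutput(f"action not allowed: {data['action']!r}")
    if len(data["argument"]) > max_arg_len:
        raise InvalidModelOutput("argument exceeds maximum length")
    return AgentStep(action=data["action"], argument=data["argument"])


# Usage
step = parse_model_output('{"action": "summarize", "argument": "Q3 report"}')
print(step)  # AgentStep(action='summarize', argument='Q3 report')
```

A response such as `{"action": "delete_db", "argument": "*"}` raises `InvalidModelOutput` and can be logged or retried instead of executed.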

Securing the Orchestration Layer

The orchestration layer controls how tasks are broken down and assigned to agents.

In single-agent systems, orchestration controls internal tool usage. In multi-agent systems, it manages communication between agents.

Common Risks

- Agent Identity Spoofing: Fake agents gain trust to inject malicious tasks and manipulate secure workflows.

- Unauthorized Agent Impersonation: Attackers mimic legitimate agents to access data or execute actions illegally.

- Rogue Agents in Workflows: Unverified agents disrupt workflows and perform unauthorized operations.

- Tampered Inter-Agent Messages: Altered messages inject malicious instructions and compromise decisions.

Security Controls

Effective protections include:

- Cryptographic Agent Identities: Use cryptographic identities to verify agents and prevent impersonation.

- Signed Inter-Agent Communication: Digitally sign messages to ensure integrity and block tampering.

- Role-Based Access Controls: Limit agent actions strictly by assigned roles and permissions.

- Immutable Audit Logging: Keep tamper-proof logs for monitoring, compliance, and investigations.
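Immutable audit logging can be approximated in application code with a hash chain, where each entry includes the hash of the one before it. The sketch below is illustrative rather than any specific product's design: `HashChainedAuditLog` is a hypothetical class, and it only makes tampering detectable. Truncating the newest entries, or rewriting the whole log, still requires write-once storage or an external log service to catch.

```python
import hashlib
import json
import time

GENESIS_HASH = "0" * 64


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class HashChainedAuditLog:
    """Append-only log in which each entry commits to the previous entry's hash.

    Editing any entry, or deleting one before the newest, breaks the chain,
    so tampering is detected when verify() runs.
    """

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, agent_id: str, action: str, details: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        body = {
            "ts": time.time(),
            "agent_id": agent_id,
            "action": action,
            # Deep copy so later changes to the caller's dict cannot alter the log.
            "details": json.loads(json.dumps(details)),
            "prev_hash": prev_hash,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```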

Agents should never communicate anonymously, and all communications should be verifiable.
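One way to make every message verifiable is to wrap it in a signed envelope that names the sender and carries a timestamp and nonce. This sketch uses HMAC-SHA256 from Python's standard library with a hypothetical per-agent key registry (`AGENT_KEYS`). Because HMAC keys are shared with the verifier, production systems would more likely use asymmetric signatures such as Ed25519, or mutual TLS, so that the verifier cannot forge messages itself.

```python
import hashlib
import hmac
import json
import secrets
import time

# Hypothetical key registry: in practice keys would be provisioned and rotated
# by a trusted identity service, never hard-coded or generated in-process.
AGENT_KEYS = {"planner-1": secrets.token_bytes(32), "executor-2": secrets.token_bytes(32)}
MAX_AGE_SECONDS = 60


def _mac(key: bytes, body: dict) -> str:
    return hmac.new(key, json.dumps(body, sort_keys=True).encode(), hashlib.sha256).hexdigest()


def sign_message(sender: str, payload: dict) -> dict:
    body = {"sender": sender, "ts": int(time.time()),
            "nonce": secrets.token_hex(16), "payload": payload}
    return {**body, "sig": _mac(AGENT_KEYS[sender], body)}


def verify_message(envelope: dict, seen_nonces: set) -> dict:
    """Return the payload only if the message is authentic, fresh, and not replayed."""
    key = AGENT_KEYS.get(envelope.get("sender"))
    if key is None:
        raise PermissionError("unknown or missing sender")  # no anonymous agents
    body = {k: v for k, v in envelope.items() if k != "sig"}
    # compare_digest performs a constant-time comparison.
    if not hmac.compare_digest(_mac(key, body), str(envelope.get("sig", ""))):
        raise PermissionError("invalid signature: forged or tampered message")
    if abs(time.time() - body["ts"]) > MAX_AGE_SECONDS:
        raise PermissionError("stale message")
    if body["nonce"] in seen_nonces:
        raise PermissionError("replayed message")
    seen_nonces.add(body["nonce"])
    return body["payload"]
```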

Securing Reasoning and Planning

Agentic systems use complex reasoning techniques such as:

- Chain-of-Thought Reasoning: Enables step-by-step internal thinking, helping agents structure complex problem solving while requiring controls to prevent hidden unsafe logic.

- Tree-of-Thoughts Exploration: Explores multiple solution paths simultaneously, improving decision quality but increasing the risk of uncontrolled or unsafe branching behavior.

- Plan-and-Execute Strategy: Separates planning from execution, allowing structured task completion while requiring strong safeguards to prevent unsafe plan implementation.

- ReAct Reasoning Loop: Combines reasoning and actions in cycles, improving adaptability while creating potential for unintended actions without strict guardrails.

Risks in Reasoning

- Hidden Instruction Manipulation: Malicious inputs can secretly alter reasoning paths, causing agents to follow unsafe logic or violate predefined security boundaries.

- Constraint-Breaking Optimization: Over-aggressive optimization may bypass ethical, legal, or security rules in pursuit of performance or efficiency.

- Cascading Hallucinations: Incorrect assumptions can multiply across reasoning steps, leading to dangerous decisions built on faulty or imaginary information.

Security Controls

You can secure reasoning by:

- Policy Constraint Enforcement: Applies strict rules during planning to ensure every decision stays within approved security, compliance, and operational boundaries.

- Reasoning Trace Logging: Records internal reasoning steps to enable auditing, debugging, and detection of abnormal or unsafe decision-making behavior.

- Reflection-Based Validation: Forces agents to self-evaluate planned actions before execution, reducing the likelihood of unsafe, incorrect, or harmful outcomes.

- Risk-Rejecting Guardrails: Automatically blocks high-risk or non-compliant plans to prevent dangerous actions from being executed in live environments.

The goal is to stop unsafe reasoning before actions are executed.
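Policy constraint enforcement, trace logging, and risk-rejecting guardrails can all sit in a single review step between planning and execution. The following is a minimal sketch under assumed rules: the `ACTION_RISK` table, the `MAX_TRANSFER` limit, and the `review_plan` function are hypothetical stand-ins for a real policy engine.

```python
from dataclasses import dataclass, field

# Hypothetical policy table: action -> risk level. Unknown actions are denied.
ACTION_RISK = {"read_file": "low", "send_email": "medium", "transfer_funds": "high"}
MAX_TRANSFER = 1_000  # hypothetical hard limit on a single transfer


@dataclass
class PlanStep:
    action: str
    params: dict


@dataclass
class PlanReview:
    approved: bool = True
    needs_human: bool = False
    reasons: list[str] = field(default_factory=list)


def review_plan(steps: list[PlanStep], trace: list[str]) -> PlanReview:
    """Check every planned step against policy before anything is executed."""
    review = PlanReview()
    for i, step in enumerate(steps):
        risk = ACTION_RISK.get(step.action)
        trace.append(f"step {i}: action={step.action} risk={risk}")  # audit trail
        if risk is None:
            review.approved = False
            review.reasons.append(f"step {i}: unknown action {step.action!r}")
            continue
        if step.action == "transfer_funds":
            amount = step.params.get("amount")
            if not isinstance(amount, (int, float)) or not 0 < amount <= MAX_TRANSFER:
                review.approved = False
                review.reasons.append(f"step {i}: invalid or excessive amount {amount!r}")
                continue
        if risk == "high":
            review.needs_human = True  # permitted only with human sign-off
    return review
```

In this sketch, a plan with `approved=False` is rejected outright, a plan with `needs_human=True` would be routed to an approval queue, and the `trace` list is kept for auditing either way.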

Memory Security in Agentic AI

Memory dramatically increases agent capabilities. It also introduces serious security risks.

Types of Memory

- Session Memory: Stores temporary context for a single interaction, helping agents stay consistent during tasks while limiting exposure once the session ends.

Risks & Controls: Sensitive data may leak if intercepted; memory must be encrypted in transit and at rest, cleared after the session, and access-restricted to the active agent.

- Cross-Session Memory: Preserves information across multiple sessions, improving continuity but increasing risk if not strictly isolated per user and securely managed.

Risks & Controls: Data contamination or leakage between sessions; implement per-user isolation, encryption, strict access controls, and audit logging.

- Shared Multi-Agent Memory: Allows multiple agents to access common data, improving collaboration while introducing higher risk of unauthorized access or data contamination.

Risks & Controls: Unauthorized access or memory corruption affecting multiple agents; enforce role-based permissions, compartmentalize data, and monitor access in real time.

- Persistent Long-Term Memory: Stores durable knowledge over long periods, enabling learning and adaptation but requiring strong protection to prevent permanent compromise.

Risks & Controls: Permanent compromise or propagation of corrupted data; encrypt memory, use multi-factor authentication, regularly audit and sanitize content, and maintain versioning/rollback mechanisms.
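As an illustration of per-user isolation, scoped sharing between agents, and versioning with rollback, here is a small in-memory sketch. `ScopedMemory` and its `shared_readers` grant map are hypothetical; encryption, authentication, and durable storage are assumed to be handled by the surrounding infrastructure and are left out.

```python
from __future__ import annotations

from collections import defaultdict


class ScopedMemory:
    """Memory partitioned by (user, agent), keeping a version history per key."""

    def __init__(self, shared_readers: dict[str, set[str]] | None = None) -> None:
        # (user, agent) -> key -> versions, oldest first
        self._data: dict[tuple[str, str], dict[str, list[str]]] = defaultdict(dict)
        # Hypothetical sharing grants: owning agent -> agents allowed to read it.
        self._shared_readers = shared_readers or {}

    def write(self, user: str, agent: str, key: str, value: str) -> None:
        # An agent can only ever write into its own (user, agent) compartment.
        self._data[(user, agent)].setdefault(key, []).append(value)

    def read(self, user: str, agent: str, key: str, owner: str | None = None) -> str | None:
        owner = owner or agent
        if owner != agent and agent not in self._shared_readers.get(owner, set()):
            raise PermissionError(f"{agent!r} may not read memory owned by {owner!r}")
        # Lookups never cross users, so one user's data cannot leak to another.
        versions = self._data.get((user, owner), {}).get(key)
        return versions[-1] if versions else None

    def rollback(self, user: str, agent: str, key: str, steps: int = 1) -> None:
        """Drop the newest versions of a key, e.g. after poisoning is detected."""
        if steps < 1:
            raise ValueError("steps must be at least 1")
        versions = self._data.get((user, agent), {}).get(key, [])
        del versions[-steps:]
```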

Securing Tool Access

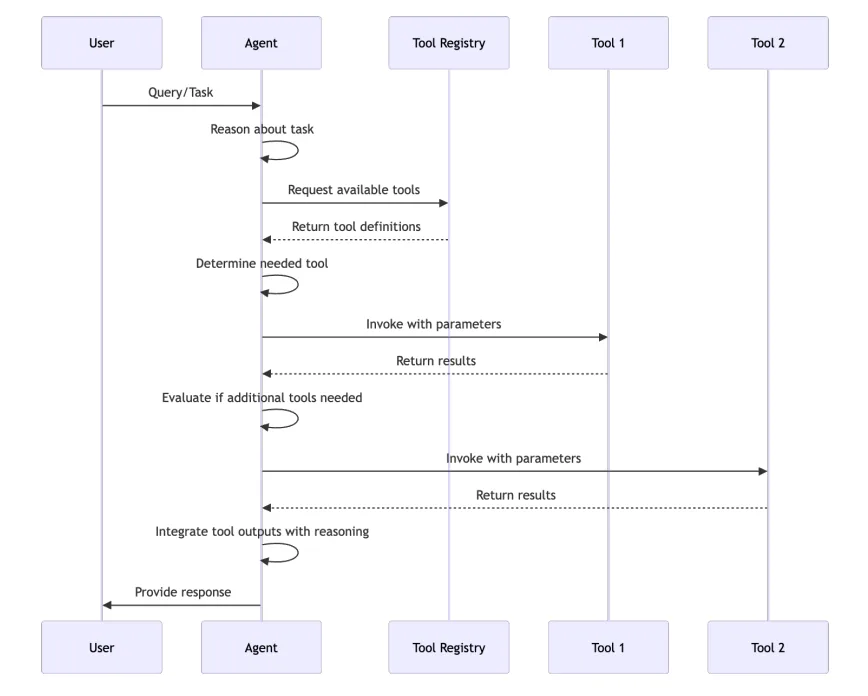

Agentic systems gain much of their power by calling external tools, and each category of tool needs its own safeguards:

- Internal APIs: Enable agents to securely interact with backend services while enforcing authentication, authorization, rate limiting, and audit logging.

- Shell Command Execution: Run system-level commands safely through strict sandboxing, input validation, permission controls, and continuous monitoring to prevent misuse.

- Cloud SDKs: Access cloud services securely by managing credentials, limiting permissions, rotating keys, and monitoring usage for suspicious activity.

- Payment Gateways: Process financial transactions safely using encryption, tokenization, fraud detection, secure authentication, and compliance with industry security standards.

- Database Connectors: Securely connect to databases through encrypted channels, least-privilege access, query validation, and real-time activity logging.
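For shell command execution, a common safeguard is to skip the shell entirely and run only allowlisted binaries with an argument list, a timeout, and a stripped-down environment. This Python sketch assumes a Unix-like host; the `ALLOWED_COMMANDS` table and `run_tool_command` helper are hypothetical, and in practice the call would run inside a sandbox or container rather than replace one.

```python
import shutil
import subprocess

# Hypothetical allowlist: command -> flags it may receive. Everything else is denied.
ALLOWED_COMMANDS = {"echo": set(), "ls": {"-l", "-a"}, "df": {"-h"}}
MAX_ARGS = 4
SUSPICIOUS_CHARS = set(";|&$`<>\n")


def run_tool_command(argv: list[str], timeout_s: float = 5.0) -> str:
    """Run an allowlisted command without a shell and return its stdout."""
    if not argv or argv[0] not in ALLOWED_COMMANDS:
        raise PermissionError(f"command not allowlisted: {argv[:1]}")
    if len(argv) - 1 > MAX_ARGS:
        raise PermissionError("too many arguments")
    for arg in argv[1:]:
        # Block option injection: only explicitly allowed flags may start with '-'.
        if arg.startswith("-") and arg not in ALLOWED_COMMANDS[argv[0]]:
            raise PermissionError(f"flag not allowed: {arg!r}")
        # With shell=False these are inert, but rejecting them flags injection attempts.
        if SUSPICIOUS_CHARS & set(arg):
            raise PermissionError(f"suspicious characters in argument: {arg!r}")
    executable = shutil.which(argv[0])
    if executable is None:
        raise FileNotFoundError(argv[0])
    result = subprocess.run(
        [executable, *argv[1:]],
        capture_output=True,
        text=True,
        timeout=timeout_s,  # raises subprocess.TimeoutExpired if exceeded
        check=True,  # raises subprocess.CalledProcessError on non-zero exit
        env={"PATH": "/usr/bin:/bin"},  # minimal environment: nothing inherited
    )
    return result.stdout
```

Passing a minimal `env` also keeps credentials stored in the parent process's environment variables out of the child command's reach.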

Securing the Operational Environment

Agentic systems often interact directly with:

- File systems: Agentic systems can read, write, and modify files, enabling automated data management, backups, and file-based task execution.

- Operating systems: They interact with OS APIs to manage processes, resources, and system configurations, performing tasks with elevated automation capabilities.

- Browsers: Agents can control browsers to automate web navigation, form filling, scraping, and testing by mimicking human interactions.

- IoT devices: They communicate with IoT devices for monitoring, control, and automation, enabling real-time responses and data collection from smart environments.

- Industrial control systems: Agentic systems can monitor and manage ICS components, ensuring efficient, automated operations while maintaining safety and security protocols.

Key Risks

- Arbitrary file access: Attackers exploit agent permissions to read, modify, or delete sensitive files without proper authorization or validation controls.

- Command injection: Malicious inputs trick agents into executing unintended system commands, allowing attackers to gain control or disrupt system operations.

- Network data exfiltration: Compromised agents secretly transfer sensitive data over networks to external servers, causing data leaks and potential compliance violations.

- Unauthorized hardware operations: Agents manipulate physical devices or system hardware without approval, potentially causing damage, safety risks, or operational disruptions.

Security Controls

- Use containerized execution environments.

- Apply network segmentation.

- Enforce default read-only access.

- Require human approval for high-risk actions.
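Default read-only access and human approval can be combined in a small file-access wrapper. In this sketch (Python 3.9+ for `Path.is_relative_to`), `FileSandbox` is a hypothetical class that confines paths to one root directory and denies writes unless an approval callback, standing in for a real human review step, returns `True`. It does not protect against symlink races, so it complements container isolation rather than replacing it.

```python
from __future__ import annotations

from collections.abc import Callable
from pathlib import Path


class FileSandbox:
    """Confine an agent's file access to one directory, read-only by default."""

    def __init__(self, root: str, approve_write: Callable[[Path, str], bool] | None = None) -> None:
        self.root = Path(root).resolve()
        # Hypothetical approval hook; without one, every write is denied.
        self.approve_write = approve_write

    def _resolve(self, rel_path: str) -> Path:
        # resolve() collapses '..' and follows symlinks, so escapes are caught here.
        target = (self.root / rel_path).resolve()
        if not target.is_relative_to(self.root):
            raise PermissionError(f"path escapes sandbox: {rel_path!r}")
        return target

    def read_text(self, rel_path: str) -> str:
        return self._resolve(rel_path).read_text()

    def write_text(self, rel_path: str, content: str) -> None:
        target = self._resolve(rel_path)
        if self.approve_write is None or not self.approve_write(target, content):
            raise PermissionError(f"write to {target.name!r} was not approved")
        target.write_text(content)
```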

Secure Architecture Patterns for Agentic Systems

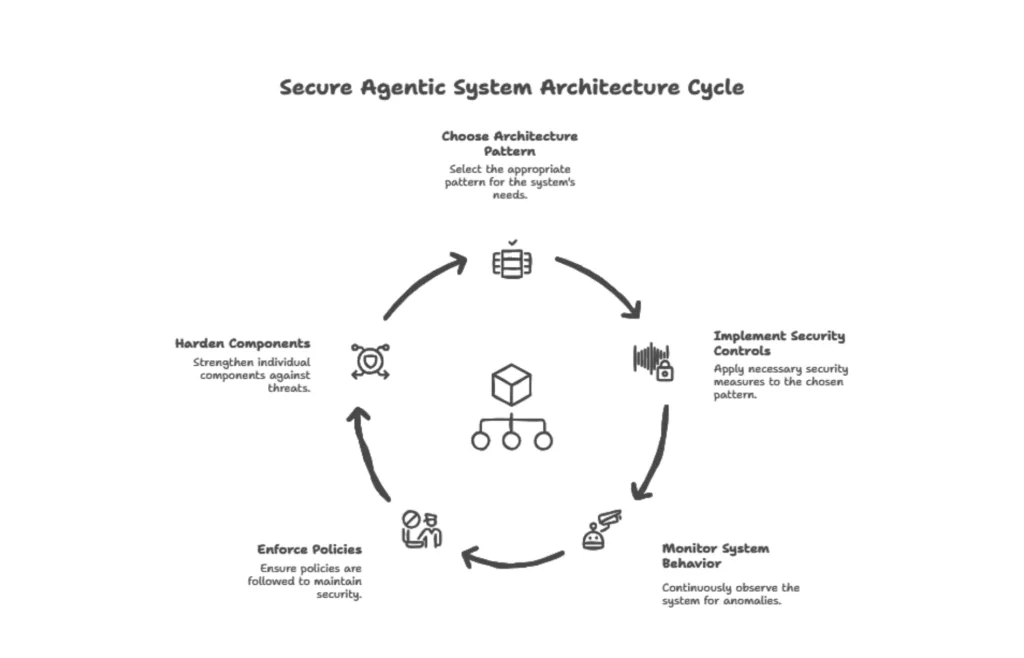

The architecture pattern you choose for an agentic AI system has a direct impact on the attack surface, isolation guarantees, and how effectively you can enforce security controls like least privilege, secure communication, and auditability. Rather than only hardening individual components (LLM, tools, memory), teams should treat security as an architectural property that is baked into how agents are composed and how they interact.

Modern agentic systems typically fall into three core patterns: sequential agents, hierarchical multi‑agent systems, and collaborative swarms or meshes. Each pattern uses the same fundamental building blocks—LLMs, orchestration, reasoning, memory, tools, and operational environments—but combines them in very different ways from a security perspective.

Shared Security Building Blocks Across Patterns

Before diving into each pattern, it helps to understand a few cross‑cutting security patterns that apply regardless of framework (LangChain, LangGraph, CrewAI, AutoGen, etc.).

- Secure Tool Use Pattern: Tools (APIs, DB connectors, code execution, browsers, IoT, PC control, etc.) are always invoked through a policy‑enforced layer, not directly from the LLM. This layer validates tool names against an allowlist, enforces strict argument schemas, applies least‑privilege credentials, and logs every call for forensics and anomaly detection.

- Secure RAG and Memory Pattern: Retrieval and memory access are mediated by a dedicated memory service that controls which user, agent, or session can read or write which data. It enforces encryption, access control, context scoping (e.g., per‑user, per‑agent, per‑session), and supports poisoning detection and rollback for corrupted entries.

- User-Privilege Assumption Pattern: When an agent interacts with systems that already support granular permissions (APIs, databases, SaaS apps), it operates under the identity and scope of the invoking user rather than a broad, shared service account. This aligns agent behavior with existing IAM boundaries and enables just‑in‑time and ephemeral access patterns.

- Control/Data Plane Separation: Architectural designs separate control messages (plans, routing, approvals, escalations) from data messages (documents, RAG context, logs), with stronger authentication, authorization, and integrity protections on the control plane. This reduces the risk that a compromised data‑plane agent can modify workflows, create rogue agents, or escalate privileges.
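The secure tool use and user-privilege assumption patterns can be combined in one gateway that sits between the model and the tools. The sketch below is framework-agnostic and entirely hypothetical: `ToolGateway`, `ToolSpec`, and `UserContext` are illustrative names, and the scopes are assumed to come from the invoking user's own token rather than a shared service account.

```python
from __future__ import annotations

import logging
from dataclasses import dataclass
from typing import Any, Callable

logger = logging.getLogger("tool_gateway")


@dataclass(frozen=True)
class ToolSpec:
    func: Callable[..., Any]
    arg_types: dict[str, type]  # strict argument schema: name -> type
    required_scope: str  # permission the invoking user must already hold


@dataclass(frozen=True)
class UserContext:
    user_id: str
    scopes: frozenset[str]  # taken from the user's own credentials


class ToolGateway:
    def __init__(self) -> None:
        self._tools: dict[str, ToolSpec] = {}  # the allowlist

    def register(self, name: str, spec: ToolSpec) -> None:
        self._tools[name] = spec

    def _authorize(self, user: UserContext, name: str, args: dict[str, Any]) -> ToolSpec:
        spec = self._tools.get(name)
        if spec is None:
            raise PermissionError(f"tool not allowlisted: {name!r}")
        if set(args) != set(spec.arg_types):
            raise ValueError(f"{name}: expected args {sorted(spec.arg_types)}, got {sorted(args)}")
        for arg_name, expected in spec.arg_types.items():
            if not isinstance(args[arg_name], expected):
                raise TypeError(f"{name}.{arg_name} must be {expected.__name__}")
        # User-privilege assumption: the agent can do no more than the user could.
        if spec.required_scope not in user.scopes:
            raise PermissionError(f"user {user.user_id} lacks scope {spec.required_scope!r}")
        return spec

    def invoke(self, user: UserContext, name: str, args: dict[str, Any]) -> Any:
        try:
            spec = self._authorize(user, name, args)
        except (PermissionError, ValueError, TypeError) as exc:
            logger.warning("denied tool=%s user=%s reason=%s", name, user.user_id, exc)
            raise
        logger.info("tool=%s user=%s args=%r", name, user.user_id, args)
        return spec.func(**args)
```

Logging denied calls as well as successful ones gives the forensic trail the pattern calls for, including evidence of injection attempts that never reached a tool.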

Sequential Agent Architecture: Simple and Easier to Secure

In a sequential pattern, a single agent processes input through a linear chain of planning, reasoning, and limited tool use. It typically uses only in‑session memory and invokes a small, predefined set of tools.

Architecture characteristics

- Brain: a single foundation LLM or SLM acting as the cognitive core.

- Control: a simple sequential workflow that moves step‑by‑step from input to output.

- Reasoning: basic chain‑of‑thought or short reasoning loops for tasks.

- Memory: in‑agent, per‑session memory that is discarded after the task.

- Tools: a narrow set of SDK‑backed tools with restricted capabilities.

- Environment: limited API access with tightly scoped permissions.

Security implications

- Smaller and more understandable attack surface, with fewer components and interactions to protect.

- Main risks remain prompt injection, hallucinated tool calls, and misuse of over‑privileged APIs or databases.

Recommended controls

- Enforce strict tool allowlists and argument schemas; never let the model construct arbitrary URLs, SQL, or shell commands.

- Run any code or OS operations in a sandboxed environment with read‑only defaults, resource limits, and no direct access to production credentials.

- Keep memory strictly in‑session and avoid long‑term persistence for higher‑risk tasks unless you add isolation and validation layers.
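For the rule against model-built SQL, one approach is to let developers own the query text and let the model supply only typed values, which the database driver binds as parameters. This sketch uses Python's built-in `sqlite3`; the `orders` table and the `orders_for_customer` helper are hypothetical.

```python
import sqlite3

# The SQL text is fixed by developers; the model may only supply the values.
ORDERS_FOR_CUSTOMER = (
    "SELECT id, total FROM orders WHERE customer_id = ? ORDER BY id LIMIT ?"
)
MAX_ROWS = 100


def orders_for_customer(conn: sqlite3.Connection, customer_id: int, limit: int = 20) -> list[tuple]:
    """Tool exposed to the agent: typed arguments in, rows out, no free-form SQL."""
    if not isinstance(customer_id, int) or not isinstance(limit, int):
        raise TypeError("customer_id and limit must be integers")
    # Cap the result size regardless of what the model asks for.
    limit = max(1, min(limit, MAX_ROWS))
    # '?' placeholders make the driver bind values as data, never as SQL text.
    return conn.execute(ORDERS_FOR_CUSTOMER, (customer_id, limit)).fetchall()
```

A value like `"1 OR 1=1"` is rejected as the wrong type, and even a string bound to a placeholder would be treated as data rather than parsed as SQL. The connection itself should also be least-privilege, for example a read-only database role, or `sqlite3.connect("file:app.db?mode=ro", uri=True)` for a SQLite file (`app.db` is a placeholder name).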

Sequential architectures are a strong fit for low‑risk use cases, prototyping, and narrow task automations where you want predictable behavior and minimal blast radius.

Hierarchical Multi‑Agent Architecture: Central Orchestrator as Control Plane

In a hierarchical pattern, an orchestrator agent decomposes a high‑level goal into sub‑tasks and delegates them to specialized agents such as calendar, email, knowledge, or workflow agents. The orchestrator aggregates results and manages the overall workflow.

Architecture characteristics

- Brain: multiple specialized LLMs or fine‑tuned models per agent.

- Control: an orchestrator implementing hierarchical planning and agent routing.

- Reasoning: structured planning and execution pipelines across agents.

- Memory: cross‑agent session memory shared within a single user/session context.

- Tools: managed platforms or services and richer tool stacks per agent.

- Environment: more extensive capabilities, such as combined API, web, and code execution.

Security implications

- The orchestrator becomes part of the critical security control plane and a high‑value target.

- Cross‑agent session memory and shared tools increase the risk of privilege compromise, data leakage, and communication poisoning if not compartmentalized.

Recommended controls

- Treat the orchestrator as a hardened security gateway that enforces RBAC, rate limits, human‑in‑the‑loop approvals, and policy checks on plans and tool invocations.

- Scope memory so that each user and workflow has its own logical compartment; cross‑agent sharing should only occur inside a single, well‑defined boundary.

- Use strong identities and signed messages for all agent‑to‑orchestrator and agent‑to‑agent interactions to prevent identity spoofing and tampering.
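A hardened orchestrator can check each tool request against the requesting agent's role, a per-agent rate limit, and a list of actions that require human sign-off. This sketch uses a sliding-window limiter; the role map, the `NEEDS_APPROVAL` set, and the `OrchestratorPolicy` class are hypothetical, and the injectable `clock` exists only to make the behavior easy to test.

```python
import time
from collections import defaultdict, deque

# Hypothetical role model for specialized agents in a hierarchical system.
AGENT_ROLES = {"cal-01": "calendar_agent", "mail-01": "email_agent"}
ROLE_PERMISSIONS = {
    "calendar_agent": {"read_calendar", "create_event"},
    "email_agent": {"read_inbox", "send_email"},
}
NEEDS_APPROVAL = {"send_email"}  # actions that require a human in the loop


class OrchestratorPolicy:
    def __init__(self, max_calls: int = 5, window_s: float = 60.0, clock=time.monotonic) -> None:
        self.max_calls = max_calls
        self.window_s = window_s
        self.clock = clock
        self._calls = defaultdict(deque)  # agent_id -> timestamps of allowed calls

    def authorize(self, agent_id: str, tool: str, human_approved: bool = False) -> str:
        """Return 'allowed' or 'pending_approval'; raise PermissionError otherwise."""
        role = AGENT_ROLES.get(agent_id)
        if role is None or tool not in ROLE_PERMISSIONS[role]:
            raise PermissionError(f"{agent_id!r} is not permitted to use {tool!r}")
        now = self.clock()
        window = self._calls[agent_id]
        while window and now - window[0] > self.window_s:
            window.popleft()  # forget calls that fell out of the sliding window
        if len(window) >= self.max_calls:
            raise PermissionError(f"rate limit exceeded for {agent_id!r}")
        if tool in NEEDS_APPROVAL and not human_approved:
            return "pending_approval"
        window.append(now)
        return "allowed"
```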

This pattern fits enterprise copilots, complex back‑office automation, and multi‑domain assistants where a central orchestrator can enforce organizational policies and compliance.

Collaborative Swarm / Distributed Mesh: Maximum Power, Maximum Risk

Collaborative swarms or distributed meshes consist of many peer agents working together without a strict hierarchy, often with shared memory and flexible collaboration channels. Examples include autonomous research swarms, distributed optimization systems, or cross‑team agent fabrics.

Architecture characteristics

- Brain: multiple specialized agents backed by different LLMs or SLMs.

- Control: decentralized multi‑agent collaboration instead of a single orchestrator.

- Reasoning: exploration‑heavy techniques, such as tree‑of‑thoughts across many agents.

- Memory: cross‑agent, cross‑session memory that can be accessed from multiple nodes.

- Tools: flexible libraries and collaboration‑oriented tool frameworks.

- Environment: distributed operational capabilities, often spanning multiple systems and networks.

Security implications

- Highest complexity and risk: emergent behavior, agent collusion, and hard‑to‑trace decision paths are all common concerns.

- A single compromised agent or poisoned shared memory entry can influence many other agents and propagate unsafe behavior.

Recommended controls

- Apply zero‑trust principles to every agent interaction: authenticate, authorize, and validate every message and tool call, even inside the “internal” mesh.

- Segment the swarm into security zones (e.g., read‑only observers, planners, executors, high‑risk actuators) and strictly control which zones can talk and which tools they can access.

- Continuously monitor agent behavior, shared memory, and collaboration graphs; implement automated circuit breakers that can quarantine agents or cut off tools when anomalies appear.
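Zone segmentation and circuit breakers can be expressed as a small guard that every inter-agent message passes through. In this sketch the four zones mirror the examples above, while `AGENT_ZONES`, `ALLOWED_LINKS`, `ANOMALY_THRESHOLD`, and `SwarmGuard` are hypothetical; a real deployment would feed the anomaly counter from richer monitoring signals than policy violations alone.

```python
from collections import Counter

# Hypothetical zone layout: agent -> security zone.
AGENT_ZONES = {"obs-1": "observer", "plan-1": "planner", "exec-1": "executor", "act-1": "actuator"}
# Directed links that are allowed; everything else is denied (zero trust).
ALLOWED_LINKS = {("observer", "planner"), ("planner", "executor"), ("executor", "actuator")}
ANOMALY_THRESHOLD = 3


class SwarmGuard:
    def __init__(self) -> None:
        self.anomalies: Counter = Counter()
        self.quarantined: set = set()

    def check_message(self, sender: str, receiver: str) -> bool:
        """Return True only if this sender may message this receiver right now."""
        if sender in self.quarantined or receiver in self.quarantined:
            return False
        # Unknown agents map to None, so their links are never allowed.
        link = (AGENT_ZONES.get(sender), AGENT_ZONES.get(receiver))
        if link not in ALLOWED_LINKS:
            self.report_anomaly(sender)
            return False
        return True

    def report_anomaly(self, agent: str) -> None:
        self.anomalies[agent] += 1
        if self.anomalies[agent] >= ANOMALY_THRESHOLD:
            self.quarantined.add(agent)  # circuit breaker trips: agent is cut off
```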

Swarm architectures are powerful but should be reserved for mature teams that already have strong observability, red‑teaming, and runtime governance in place.

Comparing Patterns from a Security Lens

You can think of these patterns as a spectrum from low complexity/low risk to high complexity/high risk. As you move from sequential to hierarchical to swarm, the number of agents, tools, and memory surfaces grows, and so do the opportunities for privilege compromise, communication poisoning, and emergent unsafe behavior.

Designing secure agentic systems therefore means not only choosing the right pattern for the business problem, but also mapping OWASP’s key components (LLMs, orchestration, reasoning, memory, tools, environment) to concrete security controls (identity, isolation, policy enforcement, observability, and runtime hardening) at each layer of that pattern.

Building Safe and Secure Agentic AI: Key Takeaways

Agentic AI provides advanced automation and decision-making capabilities while introducing complex security challenges across models, workflows, memory, tools, and environments. By implementing layered architectures, strict access controls, rigorous testing, and continuous monitoring, developers can mitigate risks such as prompt injection, memory corruption, tool misuse, and rogue agent behavior. Applying secure design patterns, enforcing least-privilege access, and isolating multi-agent operations helps AI systems act safely, predictably, and reliably.

Prioritize security from the start, continuously assess risks, and integrate strong controls at every layer. Partner with Bluetick Consultants to design and implement secure agentic AI systems that operate safely and effectively.

References

OWASP GenAI Securing Agentic Applications Guide 1.0

https://genai.owasp.org/resource/securing-agentic-applications-guide-1-0/

Taming Your Swarm: A Platform-Agnostic Strategy for Multi-Agent Systems

https://agentcyber.substack.com/p/taming-your-swarm-a-platform-agnostic