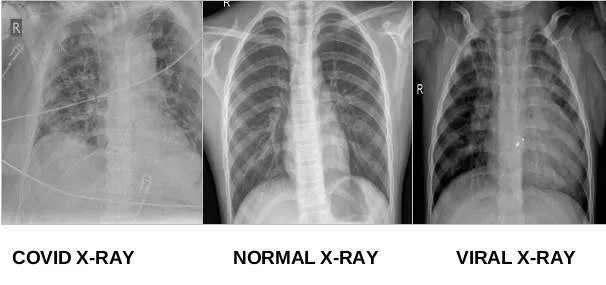

COVID-19 is a contagious disease that has caused thousands of deaths and infected millions of people worldwide. Using convolutional neural networks (CNNs) and transfer learning, we develop a highly accurate model that detects whether a patient has COVID-19, another viral infection, or neither. This work focuses on using CNN models to classify chest X-ray images into three groups: COVID-19, other viral infection, and normal.

Tools and models used

- TensorFlow

- Keras

- OpenCV

- VGG16

- VGG19

- ResNet-50

How does a Convolutional Neural Network work?

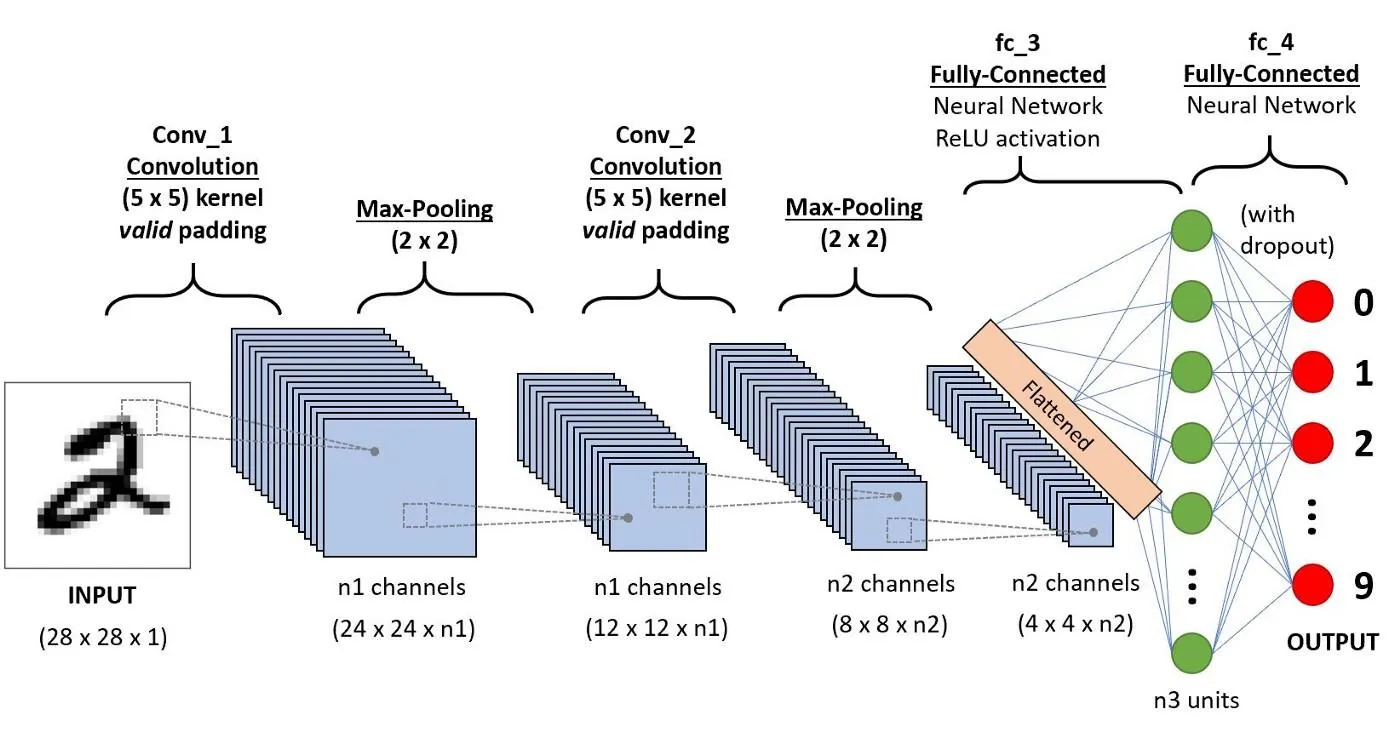

A CNN consists of an input layer, convolutional layers, pooling layers, fully connected layers, and an output layer.

A CNN slides filters over the input image to extract the important information carried by the pixels. A filter is typically of size (3*3) or (5*5). Each filter produces a feature map, and a max-pooling layer keeps the highest value in each small window of that map. These convolution and pooling steps are repeated, and the final feature maps are flattened into a one-dimensional array. That array is the input to a fully connected network (an ANN) made up of hidden layers and an output layer.
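To make the convolution, max-pooling, and flatten steps concrete, here is a minimal pure-Python sketch on a tiny grid. The 5 × 5 "image" and the 2 × 2 filter are made-up values for illustration only; a real CNN learns its filter values during training and works on much larger inputs.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (stride 1, no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool(feature_map, size=2, stride=2):
    """Keep the largest value in each size x size window of the feature map."""
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    return [
        [
            max(feature_map[i * stride + di][j * stride + dj]
                for di in range(size) for dj in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def flatten(feature_map):
    """Turn the 2-D feature map into a one-dimensional list."""
    return [value for row in feature_map for value in row]


# Hypothetical 5x5 image with a bright vertical stripe in the middle column.
image = [[0, 0, 1, 0, 0] for _ in range(5)]

# Hypothetical 2x2 filter that responds to a dark-to-bright step from left to right.
kernel = [[-1, 1],
          [-1, 1]]

features = conv2d(image, kernel)  # 4x4 feature map
pooled = max_pool(features)       # 2x2 map after pooling
vector = flatten(pooled)          # input for the fully connected layers
print(vector)                     # [2, 0, 2, 0]
```

The strong response (2) marks where the stripe begins, and pooling keeps that response while shrinking the map.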

Developing a Convolutional Neural Network

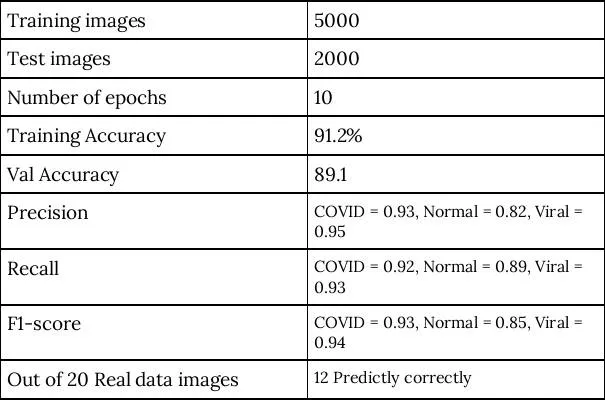

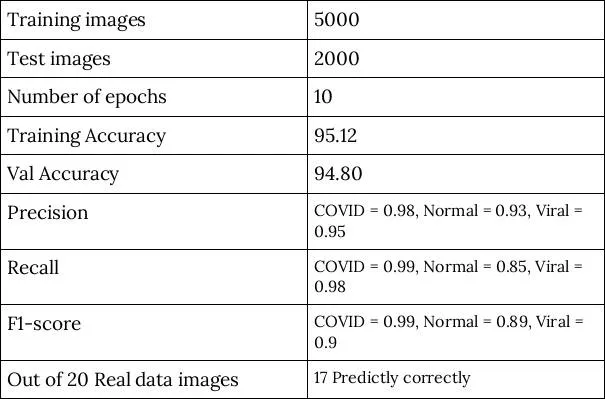

To build an accurate CNN model without fine-tuning a pre-trained network, we used 3 convolutional layers with filters of size (2*2) and 3 max-pooling layers of size (2*2) with strides of (2*2).

To train the model on the three labels, we have 5000 images for each label, plus 2000 images for testing.

The flattened one-dimensional array is the input to an ANN with 3 hidden layers that use the ReLU activation function and one output layer that uses the softmax activation function.
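The architecture described above could be written in Keras roughly as follows. This is a sketch, not the exact model from the figures: the input size (224 × 224 × 3), the filter counts (32/64/128), the hidden-layer widths (128/64/32), the ReLU activation on the convolutional layers, and the optimizer and loss are all assumptions, since the text does not state them.

```python
import tensorflow as tf
from tensorflow.keras import layers

NUM_CLASSES = 3  # COVID-19, other viral infection, normal


def build_cnn(input_shape=(224, 224, 3)):
    """Three conv + max-pool stages followed by a dense classifier.

    Filter counts, dense widths, and input size are assumed values.
    """
    return tf.keras.Sequential([
        layers.Input(shape=input_shape),
        # Three convolutional layers with 2x2 filters, each followed by
        # 2x2 max-pooling with strides of 2x2.
        layers.Conv2D(32, (2, 2), activation="relu"),
        layers.MaxPooling2D(pool_size=(2, 2), strides=(2, 2)),
        layers.Conv2D(64, (2, 2), activation="relu"),
        layers.MaxPooling2D(pool_size=(2, 2), strides=(2, 2)),
        layers.Conv2D(128, (2, 2), activation="relu"),
        layers.MaxPooling2D(pool_size=(2, 2), strides=(2, 2)),
        # Flatten the feature maps into a one-dimensional array.
        layers.Flatten(),
        # Three hidden layers with ReLU, then a softmax output layer.
        layers.Dense(128, activation="relu"),
        layers.Dense(64, activation="relu"),
        layers.Dense(32, activation="relu"),
        layers.Dense(NUM_CLASSES, activation="softmax"),
    ])


model = build_cnn()
model.compile(optimizer="adam",
              loss="categorical_crossentropy",
              metrics=["accuracy"])
```

Calling `model.summary()` prints the output shape of every layer, which is a quick way to check that the pooling layers shrink the feature maps as intended.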

Performance metrics

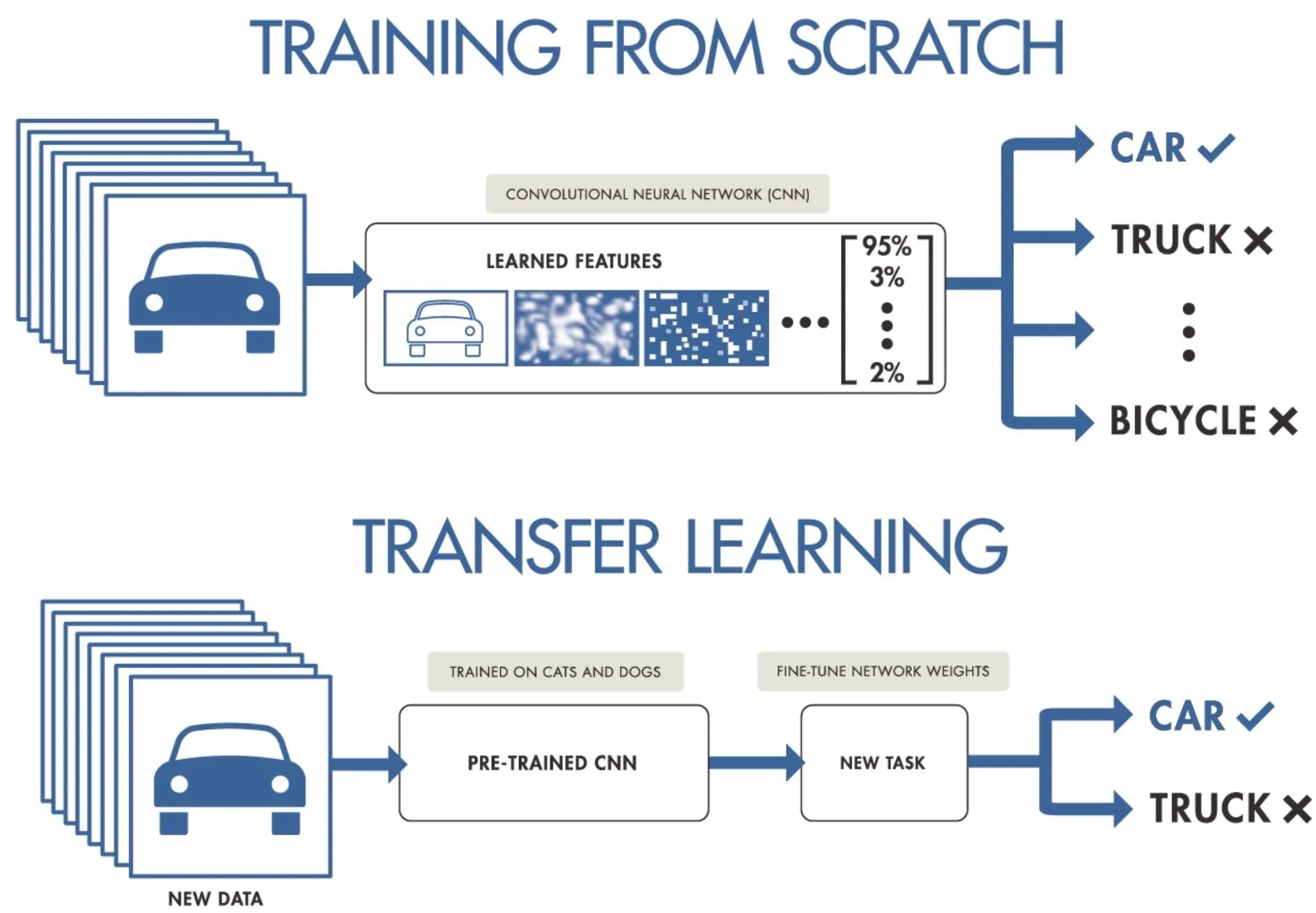

What are transfer-learning CNN techniques?

Transfer learning reuses neural networks that were already trained on ImageNet, a dataset with 1000 labels. Around 1.4 million images were used to develop these CNN architectures: roughly 1.2 million training images, 50,000 validation images, and 150,000 testing images.

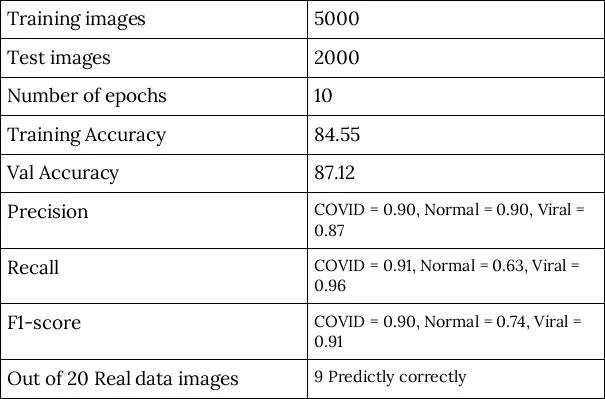

Transfer-learning technique VGG19

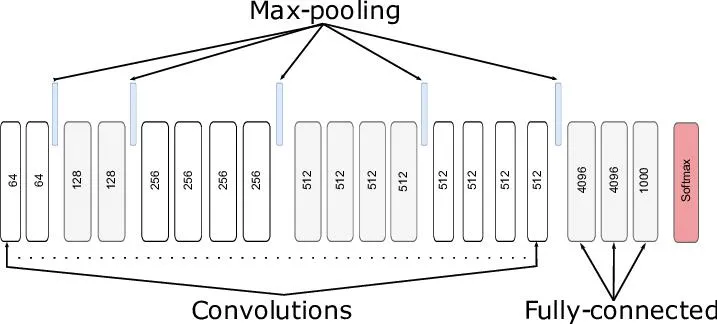

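VGG19 has 24 layers in total: 16 convolutional layers, 5 max-pooling layers, and 3 fully connected layers, the last of which is the output layer with a softmax activation function. The 19 in the name counts the 16 convolutional and 3 fully connected layers, which are the ones with trainable weights.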

Architecture

- A fixed-size (224 * 224) image is given as input to the network.

- Kernels of size (3 * 3) with a stride of 1 pixel are used, so the filters cover every part of the image.

- The stride controls how far each filter moves across its input at every step.

- Max-pooling is performed over 2 * 2-pixel windows with a stride of 2.
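The layer counts and the effect of the kernel, stride, and pooling settings listed above can be checked with a few lines of arithmetic. The sketch below assumes 1 pixel of padding on each 3 × 3 convolution and a pooling stride of 2, as in the original VGG design; the list `VGG19_BLOCKS` and the helper names are ours, written only for this illustration.

```python
# VGG19 layout: numbers are the output channels of a 3x3 convolution,
# "M" marks a 2x2 max-pooling layer.
VGG19_BLOCKS = [64, 64, "M",
                128, 128, "M",
                256, 256, 256, 256, "M",
                512, 512, 512, 512, "M",
                512, 512, 512, 512, "M"]


def conv_out(size, kernel=3, stride=1, padding=1):
    """Side length after a convolution."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, window=2, stride=2):
    """Side length after max-pooling."""
    return (size - window) // stride + 1


def trace_sizes(blocks, size=224):
    """Side length at the input and after each pooling layer."""
    sizes = [size]
    for item in blocks:
        if item == "M":
            size = pool_out(size)
            sizes.append(size)
        else:
            size = conv_out(size)  # 3x3, stride 1, padding 1 keeps the size
    return sizes


num_conv = sum(1 for item in VGG19_BLOCKS if item != "M")
num_pool = VGG19_BLOCKS.count("M")
sizes = trace_sizes(VGG19_BLOCKS)
print(num_conv, num_pool, sizes)  # 16 5 [224, 112, 56, 28, 14, 7]
```

The convolutions leave the spatial size unchanged, so only the five pooling layers shrink the image, from 224 × 224 down to 7 × 7 before the fully connected layers.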

Performance Metrics

Transfer-learning Technique ResNet50

ResNet50 is 50 layers deep: 48 convolutional layers inside the residual blocks, plus an initial convolutional layer and a final fully connected layer. It also uses 1 max-pooling layer and 1 average-pooling layer. ResNet stands for residual network, a family of very deep neural networks. These architectures rely on skip connections, which add a block's input directly to its output. Skip connections reduce the vanishing gradient problem during backpropagation.
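A toy calculation shows why skip connections help with vanishing gradients. The sketch below is a deliberate simplification: each "layer" is a single scalar weight, and the weight value 0.1 and depth 16 are made-up numbers. In a plain stack the gradient is the product of the layer derivatives; with a skip connection each block computes `x + w * x`, so each factor becomes `1 + w`.

```python
def residual_block(x, w):
    """Toy residual block: the input is added back to the layer output."""
    return x + w * x


def plain_gradient(weights):
    """Gradient through a plain stack y = w_n * ... * w_1 * x."""
    grad = 1.0
    for w in weights:
        grad *= w
    return grad


def residual_gradient(weights):
    """Gradient through a stack of residual blocks y = x + w * x."""
    grad = 1.0
    for w in weights:
        grad *= 1.0 + w  # the skip connection contributes the constant 1
    return grad


weights = [0.1] * 16  # hypothetical small derivatives in a 16-layer stack

print(plain_gradient(weights))     # about 1e-16: the gradient has vanished
print(residual_gradient(weights))  # about 4.59: the identity path keeps it alive
```

Real residual blocks contain convolutions and non-linearities, but the same idea applies: the identity path gives the gradient a route that does not shrink with depth.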

Performance Metrics

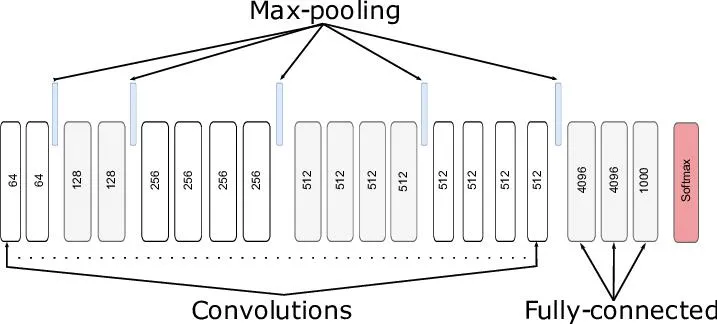

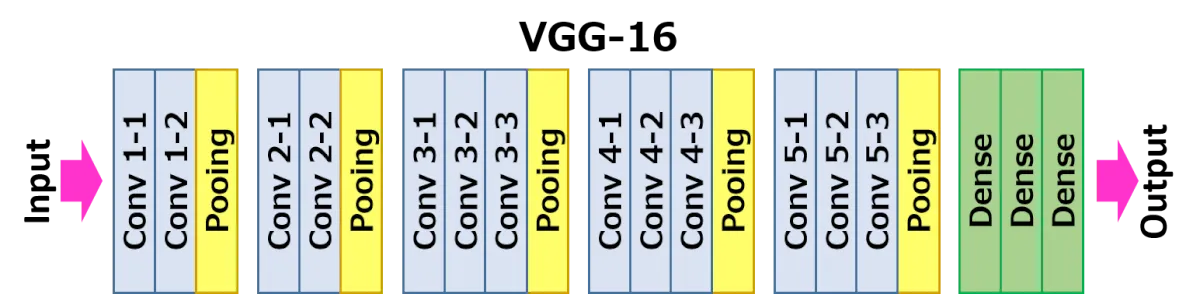

Transfer-Learning Technique VGG16

VGG16 has 21 layers in total: 13 convolutional layers, 5 max-pooling layers, and 3 fully connected layers, with a softmax activation function at the output layer.

In transfer learning we keep the pre-trained weights frozen so that training does not change them, and we add a flatten layer and an output layer of our own for our data. Each convolution layer uses 1 pixel of padding to preserve the spatial resolution of the image.
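One way to set this up in Keras is sketched below: load VGG16 without its original classifier, freeze it, and attach a flatten layer and a 3-class softmax output. The function name `build_vgg16_classifier` is ours, and the single dense output layer is an assumption; the head used in this work may contain more layers. Input preprocessing is left out for brevity.

```python
import tensorflow as tf
from tensorflow.keras import layers


def build_vgg16_classifier(num_classes=3, weights="imagenet"):
    """Frozen VGG16 convolutional base with a new softmax head."""
    base = tf.keras.applications.VGG16(
        weights=weights,        # "imagenet" downloads the pre-trained weights
        include_top=False,      # drop the original 1000-class classifier
        input_shape=(224, 224, 3),
    )
    base.trainable = False      # keep the pre-trained weights unchanged

    inputs = tf.keras.Input(shape=(224, 224, 3))
    x = base(inputs, training=False)
    x = layers.Flatten()(x)     # 7 x 7 x 512 feature maps -> one-dimensional array
    outputs = layers.Dense(num_classes, activation="softmax")(x)
    return tf.keras.Model(inputs, outputs)


# Example use (downloads the ImageNet weights on first call):
# model = build_vgg16_classifier()
# model.compile(optimizer="adam", loss="categorical_crossentropy",
#               metrics=["accuracy"])
```

Because the base is frozen, only the weights of the new output layer are updated during training.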

Architecture

- A fixed-size (224 * 224) image is given as input to the network.

- Kernels of size (3 * 3) with a stride of 1 pixel are used, so the filters cover every part of the image.

- Max-pooling is performed over 2 * 2-pixel windows with a stride of 2.

- The final layer has 3 outputs, one per class; softmax turns them into class probabilities.
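The softmax step in the last bullet can be illustrated in a few lines of plain Python. The class order and the raw scores below are hypothetical values chosen for the example, not outputs of the trained model.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


CLASS_NAMES = ["covid", "normal", "viral"]  # hypothetical class order
scores = [2.0, 0.5, 0.1]                    # hypothetical raw outputs

probs = softmax(scores)
predicted = CLASS_NAMES[probs.index(max(probs))]
print(predicted)  # covid
```

The predicted label is simply the class with the highest probability.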

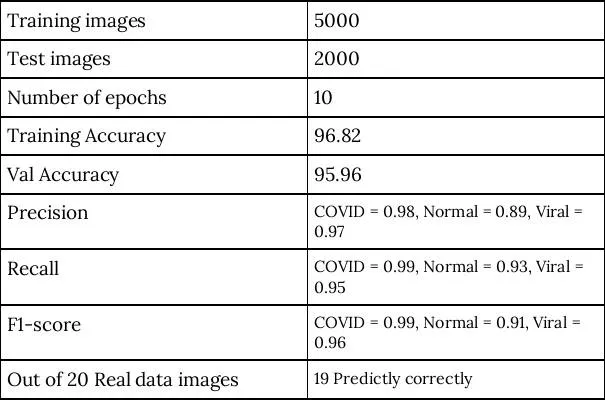

Performance Metrics

Finally, after fine-tuning VGG16, VGG19, and ResNet50 and comparing how well each extracts information from the data, we observed that VGG16 performed well in classifying COVID-19, normal, and viral pneumonia images. Of the three models, VGG16 stood out with high precision and high model accuracy.
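For reference, accuracy and per-class precision can be computed from true and predicted labels as sketched below. The six labels are toy values for illustration only and are not results from this work.

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def precision_per_class(y_true, y_pred):
    """For each predicted class: correct predictions / all predictions of that class."""
    result = {}
    for label in sorted(set(y_pred)):
        predicted = [t for t, p in zip(y_true, y_pred) if p == label]
        result[label] = sum(t == label for t in predicted) / len(predicted)
    return result


# Toy labels, made up for this example.
y_true = ["covid", "covid", "normal", "normal", "viral", "viral"]
y_pred = ["covid", "covid", "normal", "viral", "viral", "viral"]

acc = accuracy(y_true, y_pred)               # 5 of 6 correct
prec = precision_per_class(y_true, y_pred)   # viral: 2 of 3 predictions correct
print(round(acc, 3), {k: round(v, 3) for k, v in prec.items()})
```

Precision matters here because a model can reach high accuracy overall while still mislabeling one class, such as the normal image predicted as viral in this toy case.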