Introduction

Agents are a core concept in Artificial Intelligence (AI): autonomous entities that carry out tasks, make decisions, and interact with their environment. In essence, an agent is a computational system that operates independently, using its inputs and objectives to work toward predefined goals.

The Power of Multiple Agents

Combining multiple agents within a single Large Language Model (LLM) application can yield better results than relying on a single agent. Because each agent can act autonomously and react to changes in its environment, a multi-agent framework is more adaptable and responsive, and can produce more robust outcomes without external prompting.

Addressing Previous Limitations

However, traditional AI applications, particularly those built on LLMs, often grapple with inherent limitations such as the absence of crucial components like memory and contextual awareness. This deficiency often translates to subpar outputs and diminished performance, particularly in contexts demanding complexity, such as enterprise-grade applications.

Introducing LangGraph

LangGraph, a library from LangChain, is designed to address these shortcomings in AI agent management. By supporting cyclic data flows and high-level abstractions, LangGraph lets developers build stateful, multi-actor applications on top of LLMs.

Understanding LangGraph's Advantages

LangGraph enhances the agent runtime by incorporating stateful elements and supporting multi-actor interactions, enabling more sophisticated and context-aware applications. Unlike other AI libraries, LangGraph's approach prioritizes simplicity and ease of use, making it accessible for developers of all skill levels.

At its core, LangGraph facilitates the creation of multi-agent configurations, unlocking new dimensions of collaborative problem-solving. Through versatile setups like multi-agent collaboration, agent supervision, and hierarchical agent teams, LangGraph empowers agents to collaborate effectively, either by sharing states or functioning autonomously while exchanging final responses.

Let's delve deeper into these configurations:

- Multi-Agent Collaboration: In multi-agent collaboration, agents share information. Each agent consists of a prompt and an LLM. For instance, consider two agents: one acts as a researcher, extracting data, while the other serves as a chart generator, creating charts based on the extracted information.

- Agent Supervision: In this setup, a supervisor agent directs queries to independent agents. For instance, the supervisor calls agent1, which completes its task and sends the final answer back to the supervisor. Essentially, the supervisor is an agent equipped with tools, where each tool is an agent itself.

- Hierarchical Agent Teams: Each agent node is itself a supervisor setup, directing its own team of agents.

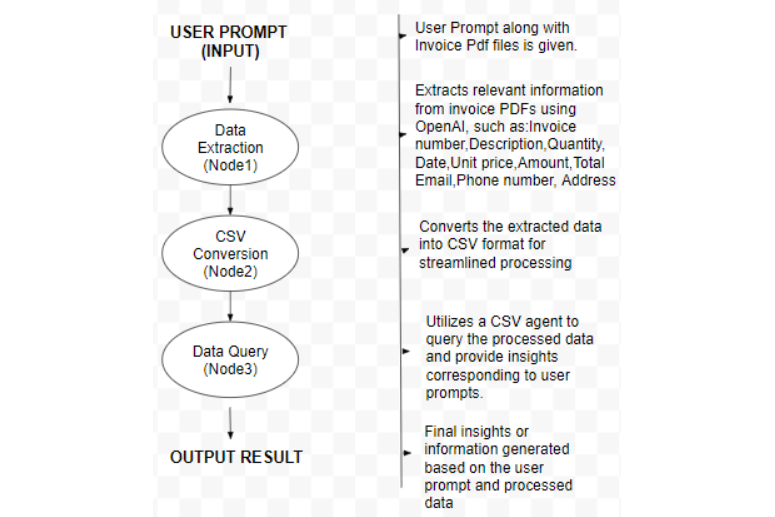
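
To make the supervisor configuration more concrete, here is a minimal plain-Python sketch. It does not use the LangGraph API: the agents are stub functions standing in for LLM-backed agents, and the names `researcher`, `chart_generator`, `choose_agent`, and `supervisor`, as well as the keyword-based routing rule, are assumptions chosen purely for illustration.

```python
from typing import Callable, Dict

# Hypothetical stand-ins for LLM-backed agents:
# each one takes a query and returns its final answer.
def researcher(query: str) -> str:
    return f"[researcher] data found for: {query}"

def chart_generator(query: str) -> str:
    return f"[chart_generator] chart drawn for: {query}"

# The supervisor treats each agent as a tool it can call by name.
AGENTS: Dict[str, Callable[[str], str]] = {
    "researcher": researcher,
    "chart_generator": chart_generator,
}

def choose_agent(query: str) -> str:
    """Toy routing rule; a real supervisor would ask an LLM to pick the agent."""
    return "chart_generator" if "chart" in query.lower() else "researcher"

def supervisor(query: str) -> str:
    agent_name = choose_agent(query)
    # The chosen agent works independently; only its final answer comes back.
    return AGENTS[agent_name](query)

print(supervisor("Find GDP figures for 2023"))
print(supervisor("Draw a chart of GDP figures"))
```

In a hierarchical team, any entry in `AGENTS` could itself be another `supervisor`-style function with its own set of agents.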

Real-World Application: Streamlining Financial Analysis

Illustrating LangGraph's utility in a real-world context, consider its application in streamlining financial analysis processes. By linking multiple agents within a LangGraph framework, financial professionals can automate the analysis of open and closed order details, leveraging LLMs to extract insights from invoice data effectively.

In practice, this entails a seamless workflow orchestrated through a LangGraph configuration. An end-user prompt triggers a series of interconnected nodes: the first node extracts invoice data using OpenAI, which is then processed into a CSV format by the second node. Finally, the CSV agent, housed within the third node, queries the processed data to fetch relevant insights in response to the user prompt.

Revolutionizing Financial Analysis:

- Increased Efficiency in Financial Reporting: LangGraph's application in financial analysis can significantly enhance the efficiency of reporting processes within industries or companies. By automating tasks such as data extraction from invoices and generating insights, LangGraph enables financial professionals to focus on higher-value activities like decision-making and strategy formulation.

- Enhanced Accuracy and Consistency: LangGraph can make financial analysis more accurate and consistent. Because LLMs extract information from every invoice in the same way, data is processed uniformly and with less risk of manual data-entry errors, leading to more reliable insights and reporting outcomes.

- Cost Reduction and Resource Optimization: Implementing LangGraph for financial analysis can lead to cost savings and resource optimization for organizations. By automating repetitive tasks and streamlining workflows, companies can allocate their financial resources more efficiently, driving down operational costs and improving overall profitability.

Let's visualize this workflow:

- Node 1 - Data Extraction: Utilizing OpenAI, this node extracts relevant information from invoice PDFs.

- Node 2 - CSV Conversion: The extracted data is transformed into CSV format for streamlined processing.

- Node 3 - Data Query: A CSV agent queries the processed data to provide insights corresponding to user prompts.

To illustrate this in practice, let's consider a user prompt, "List dates with the highest total amounts among them.", along with a few invoice PDF files. Through LangGraph, the interconnected agents collaboratively process the query, extract relevant insights from the invoice data, and deliver a concise response, showing how LangGraph can coordinate a multi-step AI workflow.

CODE

- Step 1: Installing Required Packages

This step installs the Python packages needed for the project.

    !pip install langgraph pypdf python-dotenv langchain langchain_openai langchain_experimental

Here, we import the libraries and modules required for data processing, file handling, and interaction with the LangGraph.

    import os
    import re

    import pandas as pd
    from dotenv import load_dotenv
    from pypdf import PdfReader
    from langchain.prompts import PromptTemplate
    from langchain_openai import OpenAI  # from the langchain_openai package installed above
    from langchain_experimental.agents.agent_toolkits import create_csv_agent
    from langgraph.graph import Graph

    # Load environment variables (including OPENAI_API_KEY) from the .env file
    load_dotenv()

    # Fail early with a clear message if the API key is missing
    if not os.environ.get("OPENAI_API_KEY"):
        raise RuntimeError("OPENAI_API_KEY is not set; add it to your .env file.")

    llm1 = OpenAI(temperature=0.2)

Node 1 extracts data from PDF files using OpenAI. It defines one function that extracts the text from a PDF document and another that asks OpenAI to pull out specific fields based on a predefined prompt.

    # Extract the text of every page in a PDF file
    def get_pdf_text(pdf_doc):
        text = ""
        pdf_reader = PdfReader(pdf_doc)
        for page in pdf_reader.pages:
            # Guard against pages that yield no text
            text += page.extract_text() or ""
        return text

    # Ask the LLM to extract the invoice fields from the raw text
    def extracted_data(pages_data):
        # Doubled braces {{ }} are literal braces; {pages} is the only placeholder
        template = """Extract all the following values : invoice no., Description, Quantity, date,
        Unit price , Amount, Total, email, phone number and address from this data: {pages}

        Expected output: remove any dollar symbols {{'Invoice no.': '1001329','Description': 'Office Chair','Quantity': '2','Date': '5/4/2023','Unit price': '1100.00','Amount': '2200.00','Total': '2200.00','Email': 'Santoshvarma0988@gmail.com','Phone number': '9999999999','Address': 'Mumbai, India'}}
        """
        prompt_template = PromptTemplate(input_variables=["pages"], template=template)
        full_response = llm1.invoke(prompt_template.format(pages=pages_data))
        return full_response
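
The doubled braces in the prompt template are easy to overlook. The sketch below uses plain `str.format` to show the effect, on the assumption that the template is rendered with the same f-string-style rules; the shortened template and the sample invoice text are made up for illustration.

```python
# Plain-Python illustration of the brace escaping used in the prompt template.
# Assumption: the template is rendered with str.format-style substitution.
template = (
    "Extract the invoice number from this data: {pages}\n"
    "Expected output: {{'Invoice no.': '1001329'}}"
)

# {pages} is replaced; {{ and }} collapse to single literal braces.
rendered = template.format(pages="Invoice #1001329 ...")
print(rendered)
```

Without the doubling, the formatter would treat the example dictionary as a placeholder and fail to render the prompt.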

Finally, a shared state variable is used to pass information between all three nodes.

    # Shared state, starting as an empty dict
    state = {}
    # "messages" starts as an empty list; new messages are appended as they pass between nodes
    state["messages"] = []

    import ast  # literal_eval parses the LLM's dict without executing arbitrary code

    def create_docs(state):
        # `state` carries the user prompt; the extraction step itself does not need it

        # Create an empty DataFrame with these column names
        dframe = pd.DataFrame({'Invoice no.': pd.Series(dtype='str'),
                               'Description': pd.Series(dtype='str'),
                               'Quantity': pd.Series(dtype='str'),
                               'Date': pd.Series(dtype='str'),
                               'Unit price': pd.Series(dtype='str'),
                               'Amount': pd.Series(dtype='str'),
                               'Total': pd.Series(dtype='str'),
                               'Email': pd.Series(dtype='str'),
                               'Phone number': pd.Series(dtype='str'),
                               'Address': pd.Series(dtype='str')
                               })

        # List of paths to your local PDF files
        user_pdf_list = ["/content/invoice_pdf/invoice_2001321.pdf",
                         "/content/invoice_pdf/invoice_3452334.pdf"]

        # Iterate through the PDF files and pull the data out of each one
        for filename in user_pdf_list:
            raw_file_pdfdata = get_pdf_text(filename)
            llm_extract_data = extracted_data(raw_file_pdfdata)

            pattern_sequence = r'\{(.+)\}'
            match_words = re.search(pattern_sequence, llm_extract_data, re.DOTALL)
            if match_words:
                extracted_text = match_words.group(1)
                # Convert the extracted text to a dictionary
                data_dict = ast.literal_eval('{' + extracted_text + '}')
                data_dict = {key: [value] for key, value in data_dict.items()}
                print(data_dict)
                # Append this invoice as a new row
                dframe = pd.concat([dframe, pd.DataFrame(data_dict)], ignore_index=True)
            else:
                print("No match found.")

        return dframe
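
Because the model's reply is free text, the parsing step can fail or receive unexpected content. The sketch below shows one defensive way to handle that, using only the standard library. `parse_invoice_dict` is a hypothetical helper, not part of the original pipeline, and the sample replies are made up.

```python
import ast
import re
from typing import Optional

def parse_invoice_dict(llm_output: str) -> Optional[dict]:
    """Return the outermost {...} span in llm_output as a dict, or None."""
    match = re.search(r"\{.+\}", llm_output, re.DOTALL)
    if not match:
        return None
    try:
        # literal_eval accepts only Python literals, so a reply containing
        # executable expressions is rejected rather than run.
        parsed = ast.literal_eval(match.group(0))
    except (ValueError, SyntaxError, TypeError):
        return None
    return parsed if isinstance(parsed, dict) else None

sample = "Here is the data:\n{'Invoice no.': '1001329', 'Total': '2200.00'}"
print(parse_invoice_dict(sample))
print(parse_invoice_dict("no dictionary here"))
print(parse_invoice_dict("{__import__('os').getcwd()}"))
```

Returning `None` on failure lets the calling loop skip a problematic invoice instead of aborting the whole run.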

Node 2 saves the extracted data from Node 1 into a CSV file for further processing.

It defines a function that takes the DataFrame built by Node 1 and writes it to a CSV file.

    # Node to save the LLM-extracted data as CSV
    def save_csv(dframe):
        path = r"/content"
        dframe.to_csv(os.path.join(path, r'invoice_details.csv'), index=False)
        # Return the module-level state so the next node receives the user prompt
        return state

Node 3 retrieves data from the CSV file generated by Node 2 based on user prompts.

It defines a function to query the CSV file using LangChain's CSV agent and return the results.

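    def query_csv(state):
        messages = state['messages']
        user_input = messages[-1]  # the latest user prompt
        invoice_csv_path = r"/content/invoice_details.csv"
        # Note: recent langchain_experimental releases may also require
        # allow_dangerous_code=True here, because the agent runs generated Python.
        agent_executor = create_csv_agent(llm1, invoice_csv_path, verbose=True)
        return agent_executor.invoke(user_input)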
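
The CSV agent answers a question by having the LLM write and run pandas code against the file. For the example prompt, the computation amounts to something like the following standard-library sketch. The rows are invented sample data in a subset of the CSV's columns, not real output of the pipeline.

```python
import csv
import io

# Invented sample rows using a subset of the columns in invoice_details.csv.
csv_text = """Invoice no.,Description,Date,Amount
1001329,Office Chair,5/4/2023,2200.00
1001330,Standing Desk,5/5/2023,3100.00
"""

rows = list(csv.DictReader(io.StringIO(csv_text)))

# Pick the row whose Amount is largest, comparing as numbers rather than strings.
top_row = max(rows, key=lambda row: float(row["Amount"]))
print(top_row["Date"])  # 5/5/2023
```

Converting `Amount` to a number before comparing matters: compared as strings, "900.00" would rank above "2200.00".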

This step creates a workflow using LangGraph, connecting the three nodes defined above in a sequence to form a complete data processing pipeline.

    # Define a LangGraph graph
    workflow = Graph()

    # Register the three nodes
    workflow.add_node("agent_1", create_docs)
    workflow.add_node("node_2", save_csv)
    workflow.add_node("responder", query_csv)

    # Connect them in sequence: agent_1 -> node_2 -> responder
    workflow.add_edge('agent_1', 'node_2')
    workflow.add_edge('node_2', 'responder')

    workflow.set_entry_point("agent_1")
    workflow.set_finish_point("responder")

    app = workflow.compile()
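
This pipeline relies on each node's return value becoming the next node's input, which is why `create_docs` can return a DataFrame and `save_csv` can accept one. The plain-Python sketch below imitates that hand-off for a linear chain. `run_chain` and the three stub nodes are hypothetical and are not part of LangGraph.

```python
from typing import Any, Callable, List

def run_chain(nodes: List[Callable[[Any], Any]], initial_input: Any) -> Any:
    """Call each node in order, feeding every output into the next node."""
    value = initial_input
    for node in nodes:
        value = node(value)
    return value

# Shared state and a stand-in for the CSV file on disk.
state = {"messages": ["which date has the greatest Amount?"]}
saved = {}

# Stub nodes standing in for create_docs, save_csv, and query_csv.
def extract(current_state: dict) -> list:
    return [{"Date": "5/4/2023", "Amount": "2200.00"}]  # pretend LLM extraction

def save(rows: list) -> dict:
    saved["rows"] = rows  # pretend CSV write
    return state          # hand the user prompt on to the last node

def respond(current_state: dict) -> str:
    question = current_state["messages"][-1]
    return f"answering {question!r} over {len(saved['rows'])} row(s)"

result = run_chain([extract, save, respond], state)
print(result)
```

The middle node has to return the state explicitly, because the final node would otherwise receive the rows instead of the user prompt.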

Finally, the LangGraph application is invoked with user inputs to execute the defined workflow and obtain the desired output.

    inputs = {"messages": ["list the date which have greatest Amount among them?"]}

    # save_csv returns the module-level state, so point it at the inputs
    state = inputs

    app.invoke(inputs)

User Prompt:

This is an example of a user prompt given to the LangGraph application.

List the date which have greatest Amount among them?

Output:

This is the output generated by the LangGraph application based on the user prompt.

{'input': 'list the date which have greatest Amount among them?', 'output': '5/5/2023'}

Conclusion

In conclusion, LangGraph emerges as a transformative tool in the arsenal of AI developers, offering a robust platform for constructing advanced LLM applications. Through its intuitive interface and versatile configurations, LangGraph empowers developers to harness the full potential of AI agents, driving innovation and efficiency across diverse domains.