Introduction

In the fast-paced world of sales, every conversation is a potential turning point. Sales executives spend hours analyzing sales calls, extracting insights, and summarizing their content to improve their strategies. Manual call evaluation is both time-consuming and subject to human error, making it a prime candidate for automation through artificial intelligence. In this blog, we'll explore the need for AI in sales call evaluation and how technologies like OpenAI Whisper, Pyannote-Audio, and Pretrained Speaker Embeddings, such as SpeechBrain/spkrec-ecapa-voxceleb, are transforming the sales landscape.

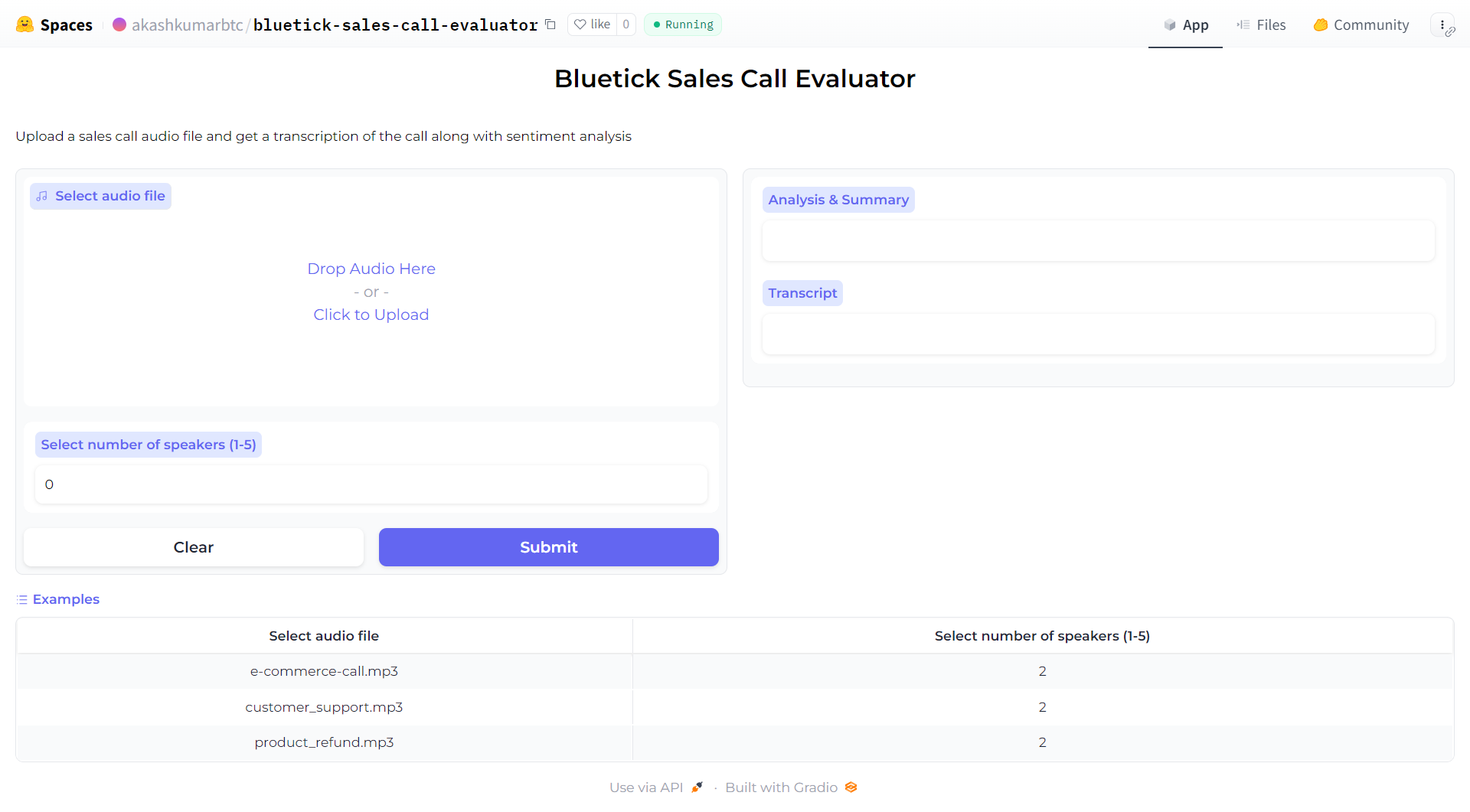

*Kindly be aware that this application is currently running on a CPU server for demonstration purposes. Processing times may extend to a minute or two.

** To utilize preloaded audio files, simply choose from the available examples.

The Need for AI in Sales Call Evaluation

Sales call evaluation is a critical part of a sales team's success. It helps identify what went right and what could be improved, ultimately leading to better sales strategies and more successful interactions with customers. However, manually listening to and summarizing sales calls is a time-consuming process that can be significantly optimized by leveraging generative AI tools.

Consider a scenario where a sales executive spends an average of 10 minutes manually listening to a sales call and writing a summary of the key points. In a busy workday, this can add up to hours of valuable time that could be better utilized for client interactions, strategizing, and other essential tasks. This is where AI-driven tools like the Bluetick Sales Call Evaluator come into play, streamlining the process and making it more efficient.

Key Components used:

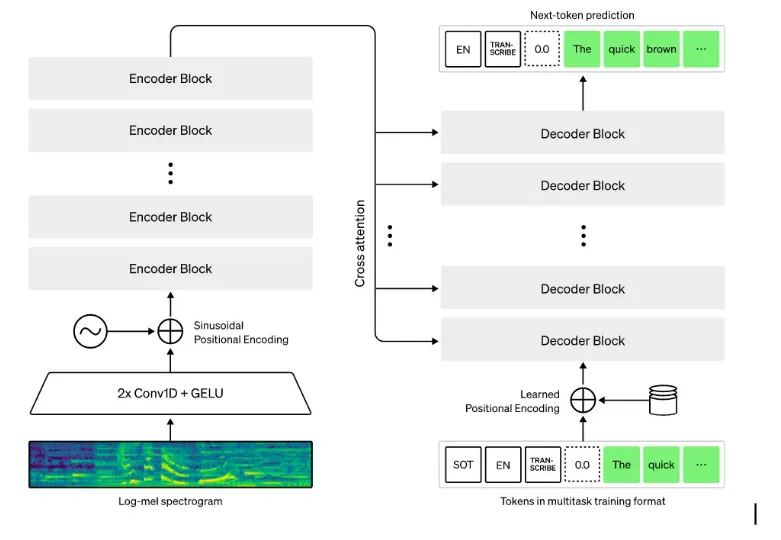

1. OpenAI Whisper

OpenAI's Whisper is a state-of-the-art automatic speech recognition (ASR) system that has opened up new horizons in the field of natural language processing. Whisper excels at transcribing spoken language into written text, making it a valuable asset for sales call evaluation. By transcribing sales calls accurately and efficiently, Whisper lays the foundation for more advanced AI-driven analysis.

Sales teams can use Whisper to automatically generate text transcripts of sales calls, enabling them to quickly search for specific keywords or phrases, identify important moments, and gain a better understanding of the conversation's context. This not only saves time but also ensures that no crucial details are missed.

2. Pyannote-Audio for Speaker Diarization

Pyannote-Audio is an open-source toolkit designed for the analysis of audio data. One of its standout features is speaker diarization, a process that divides a conversation into segments, each associated with a specific speaker. In sales call evaluation, this technology allows sales teams to identify who said what during a call, which is crucial for understanding the dynamics of the conversation.

Speaker diarization provided by Pyannote-Audio is a game-changer for sales executives. It allows them to attribute specific statements to either the salesperson or the customer, aiding in the identification of effective communication strategies, clarifying misunderstandings, and enhancing customer relationships.

3. Pretrained Speaker Embeddings - SpeechBrain/spkrec-ecapa-voxceleb

Pretrained speaker embeddings, such as those provided by SpeechBrain/spkrec-ecapa-voxceleb, are the icing on the cake when it comes to sales call evaluation. These embeddings are trained on vast amounts of data, allowing the AI model to understand and recognize distinct speaker characteristics.

Sales teams can use pretrained speaker embeddings to identify speakers, track customer interactions over time, and assess the effectiveness of different sales representatives. This insight can lead to more personalized sales strategies, better-targeted customer engagement, and ultimately, higher conversion rates.

Let's dive right into the coding

Install all the dependencies

pip install git+https://github.com/openai/whisper.git -q

pip install -q git+https://github.com/pyannote/pyannote-audio

pip install --quiet openai

pip install textstat -q

Download and load the whisper model

from whisper import _download, _MODELS

_download(_MODELS["medium"], "/models/", False)

100%|██████████████████████████████████████| 1.42G/1.42G [00:13<00:00,

113MiB/s] '/models/medium.pt

import whisper

model = whisper.load_model('/models/medium.pt')

Convert the mp3 audio to .wav

To use this audio click here

import wave

import subprocess

audio = "/content/customer_support.mp3"

if audio[-3:] != 'wav':

audio_file_name = audio.split("/")[-1]

audio_file_name = audio_file_name.split(".")[0] + ".wav"

subprocess.call(['ffmpeg', '-i', audio, audio_file_name, '-y'])

path = audio_file_name

Transcribe the audio

result = model.transcribe(path)

segments = result["segments"]

print(segments)

[{'id': 0, 'seek': 0, 'start': 0.0, 'end': 5.48, 'text': " Thank you for calling Martha's Flowers Towne SST. Hello I'd like to order flowers"},

{'id': 1, 'seek': 0, 'start': 5.48, 'end': 9.040000000000001, 'text': " and I think you have what I'm looking for. I'd be happy to take care of your"} …

Load the embedding model and perform diarization

import contextlib

with contextlib.closing(wave.open(path,'r')) as f:

frames = f.getnframes()

rate = f.getframerate()

duration = frames / float(rate)

from pyannote.audio.pipelines.speaker_verification import

PretrainedSpeakerEmbedding

import torch

embedding_model = PretrainedSpeakerEmbedding(

"speechbrain/spkrec-ecapa-voxceleb",

device=torch.device("cuda"))

from pyannote.audio import Audio

from pyannote.core import Segment

import numpy as np

audio = Audio()

def segment_embedding(segment):

start = segment["start"]

# Whisper overshoots the end timestamp in the last segment

end = min(duration, segment["end"])

clip = Segment(start, end)

waveform, sample_rate = audio.crop(path, clip)

waveform = waveform.mean(dim=0, keepdim=True)

return embedding_model(waveform.unsqueeze(0))

embeddings = np.zeros(shape=(len(segments), 192))

for i, segment in enumerate(segments):

embeddings[i] = segment_embedding(segment)

embeddings = np.nan_to_num(embeddings)

Perform diarization

from sklearn.cluster import AgglomerativeClustering

num_speakers = 2 # Set the number of speakers manually

clustering = AgglomerativeClustering(num_speakers).fit(embeddings)

labels = clustering.labels_

for i in range(len(segments)):

segments[i]["speaker"] = 'SPEAKER '+str(labels[i]+1)

import datetime

def time(secs):

return datetime.timedelta(seconds=round(secs))

conversation = ""

for (i, segment) in enumerate(segments):

if i == 0 or segments[i - 1]["speaker"] != segment["speaker"]:

conversation += "\n" + segment["speaker"] + ' ' +

str(time(segment["start"])) + '\n'

conversation += segment["text"][1:] + ' '

print(conversation)

- SPEAKER 1 0:00:00 Thank you for calling Martha's Flowers Towne SST. Hello I'd like to order flowers

- SPEAKER 2 0:00:05 and I think you have what I'm looking for. I'd be happy to take care of your

- SPEAKER 1 0:00:09 order may have your name please. Randall Thomas. Randall Thomas can you spell that for me?

-

SPEAKER 2 0:00:14 Randall R-A-N-B-A-L-L Thomas T-H-O-M-A-S.

.. .

Perform Analysis with AI

Perform Sentiment Analysis

import nltk

from nltk.sentiment.vader import SentimentIntensityAnalyzer

nltk.download('vader_lexicon')

# Perform sentiment analysis

sid = SentimentIntensityAnalyzer()

sentiment_scores = sid.polarity_scores(conversation)

print(f"Sentiment Analysis:\nPositive: {sentiment_scores['pos']} |

Negative: {sentiment_scores['neg']} | Neutral:

{sentiment_scores['neu']}\n\n")

- Sentiment Analysis: Positive: 0.183 | Negative: 0.037 | Neutral: 0.78

Calculate the Flesch-Kincaid readability score

import textstat

# Calculate the Flesch-Kincaid readability score

readability_score = textstat.flesch_reading_ease(conversation)

print(f"Readability Score(Flesch-Kincaid):{readability_score}\n\n")

- Readability Score (Flesch-Kincaid): 88.33

Generate call summary

import openai

openai.api_key = ""

messages=[

{

"role": "system",

"content": """

You will be provided with a conversation. Your task is to give

a summary and mention all the main details in bullet points.

Replace speaker 1 and speaker 2 with sales excutive or comapny

name and customer name if available.

"""

},

{

"role": "user",

"content": conversation

}

]

response = openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages=messages,

temperature=0,

max_tokens=256,

top_p=1,

frequency_penalty=0,

presence_penalty=0

)

print(response["choices"][0]["message"]["content"])

- Customer wants to order flowers from Martha's Flowers Towne SST

- Customer's name is Randall Thomas

- Customer's phone number is 409-866-5088

- Customer's email address is Randall.Thomas@gmail.com

- Customer's shipping address is 6800 Gladys Avenue, Beaumont Texas, zip code 77706

- Customer wants to purchase one dozen long stem red roses - Total amount of the order is $40

- Customer wants the roses to be delivered within 24 hours - Customer doesn't need any further assistance

- Customer thanks Martha's Flowers and ends the call

Conclusion

The integration of generative AI technologies like OpenAI Whisper, Pyannote-Audio, and Pretrained Speaker Embeddings is revolutionizing the way sales calls are evaluated. By automating the transcription, diarization, and speaker recognition processes, these tools save sales executives valuable time and provide deeper insights into the dynamics of their sales calls. In a highly competitive sales environment, where every interaction counts, embracing AI-driven solutions can be a game-changer. As technology continues to advance, we can expect even more innovative AI solutions to shape the future of sales call evaluation and, ultimately, sales success.

- Making Every Conversation Count.