Introduction

Welcome to our exploration of the fascinating world of large language models! As many of you are aware, the scale of these models has skyrocketed recently. Take, for instance, GPT-4, which boasts a staggering 1.8 trillion parameters. The desire to fine-tune these behemoths with custom datasets is growing, yet it poses significant challenges. Fine-tuning requires adjusting countless parameters, which is time-consuming, and the GPU demands are nothing short of immense. Today, we'll delve into strategies for handling these colossal models and focus on LORA, a renowned technique for parameter-efficient fine-tuning.

Precision & Quantization

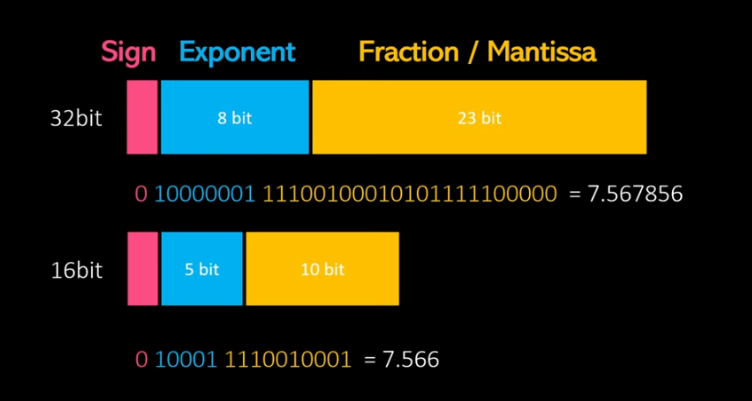

Before we proceed, let's brush up on some key concepts: precision and quantization. Neural networks' weight matrices comprise floating-point numbers, typically stored in a float32 data type. But what does this mean in practice?

In the realm of computer science, we learn that computers represent floating-point numbers using binary digits, allocating specific bits for the sign, exponent, and fraction.

For example, the number 7.5, along with additional digits, can be represented in 32 bits. An emerging approach to manage these large datasets is to reduce precision, such as switching to half-precision, which uses half the bits for number representation. This method cuts memory requirements but at the cost of precision, as demonstrated by the inability to represent as many digits compared to the 32-bit format.

Quantization

Though reducing precision can lead to rounding errors and accumulation of inaccuracies, an exciting trend in this field is quantization. Quantization allows for extremely low levels of precision, sometimes using only integers, while still maintaining model performance. Unlike simple bit reduction, quantization involves calculating a factor to preserve precision levels.For example, consider converting a half-precision matrix to int8 using quantization. While the specifics of various quantization techniques are a topic for another day, the key takeaway is that it's feasible to shrink model sizes by lowering precision. A recent groundbreaking study involving 35,000 experiments found that 4-bit quantization is almost universally optimal. This finding is crucial, as reduced precision not only saves memory but also accelerates training on most GPUs.

When we halve the precision, we can potentially double the training speed in terms of FLOPS (floating point operations per second), a benchmark for comparing hardware speeds. This increase means hardware can execute matrix multiplications faster, a critical component of deep learning.

In summary, two effective methods to make enormous models more manageable are precision reduction and quantization. These techniques not only improve data handling but also enhance training efficiency.

LoRA: Low-Rank Adaptation of Large (Language) Models

LoRA - Low-Rank Adaptation, a groundbreaking fine-tuning technique developed by Microsoft researchers. LoRA has made waves in the world of large models, offering a tailored approach to model adaptation. Although it was tested using GPT-3 and primarily discussed in the context of language models and NLP tasks, LoRA's versatility is its standout feature. This method is readily applicable across various models in different domains.

Why LoRA Makes a Difference

You might wonder, why LoRA? Why not just tweak the entire model? Here's the insight: Large models, whether they're language, audio-linguistic like Whisper, or visual-focused, are designed to grasp the general essence of their respective domains. They're adept at a broad spectrum of tasks, delivering decent zero-shot accuracy. However, when it comes to honing these models for specific tasks or datasets, the focus narrows. Only a select set of features need to be adjusted or relearned. This is where LoRA shines. It posits that the update matrix (ΔW) for such a task can be low-rank.

Understanding Rank Adaptation and Its Implications

But what does "low-rank adaptation" really mean? Simply put, the rank of a matrix indicates the number of independent row or column vectors it contains. In this context, it's the minimum number needed. This concept is crucial because it suggests that there's a low-dimensional reparameterization that is just as effective for fine-tuning as adjusting the full parameter space. In practical terms, this means that downstream tasks don't necessarily require tuning all parameters. A much smaller subset of weights can be transformed to achieve impressive performance.

The Practical Impact of LoRA

The implications are significant. LoRA suggests that large foundational models, which already possess a vast array of learned features and are designed for general purposes, can be fine-tuned on a surprisingly small number of parameters while still delivering strong performance. This approach not only makes the fine-tuning process more efficient but also more accessible, as it reduces the computational resources required.

In essence, LoRA is a game-changer in the world of model fine-tuning, offering a smarter, more resource-efficient path to customizing large models for specific tasks.

What is PEFT?

Parameter-Efficient Fine-Tuning (PEFT) is an innovative technique in the field of Natural Language Processing (NLP). It's designed to enhance the performance of pre-trained language models for specific tasks, all while conserving computational resources and time. The core idea behind PEFT is to leverage the existing parameters of a pre-trained model, fine-tuning them on a smaller, task-specific dataset. This approach stands in contrast to training an entire model from scratch, offering significant savings in terms of computation and time.

PEFT's efficiency stems from its selective fine-tuning strategy. By freezing some layers of the pre-trained model and only adjusting the final few layers tailored to the specific task, PEFT reduces the computational burden. This enables the model to adapt to new tasks with less demand for computational power and fewer labeled data examples. While PEFT is relatively new in NLP, the concept of updating only the last layer of models has been a staple in computer vision since the advent of transfer learning.

Distinguishing PEFT from Traditional Fine-Tuning

Understanding the difference between fine-tuning and parameter-efficient fine-tuning is crucial. Traditional fine-tuning involves taking a pre-trained model and training it further on a new dataset for a different task. This process typically includes retraining all layers and parameters of the model, which can be computationally intensive and time-consuming, particularly for large-scale models.

Parameter-efficient fine-tuning, on the other hand, is a more targeted approach. It concentrates on updating only a select subset of a pre-trained model's parameters deemed crucial for the new task. By focusing on these key parameters, PEFT significantly reduces the computational load and time required for fine-tuning, making it a more efficient option.

In essence, while traditional fine-tuning remodels the entire structure, PEFT is like renovating only the essential parts of a building to serve a new purpose, preserving the foundational architecture yet adapting it to new requirements with minimal disruption and resource expenditure.

Now let's look at the Code

Installing Necessary Libraries

Firstly, we need to install some key libraries. We'll use trl (for reinforcement learning-based training), transformers, and accelerate from Hugging Face, along with the PEFT library. We also need additional tools like datasets, bitsandbytes for efficient training, einops for tensor operations, and wandb for experiment tracking.

Run these commands in your Python environment to install the libraries:

!pip install -q -U trl transformers accelerate git+https://github.com/huggingface/peft.git

!pip install -q datasets bitsandbytes einops wandb

Loading and Preparing the Data

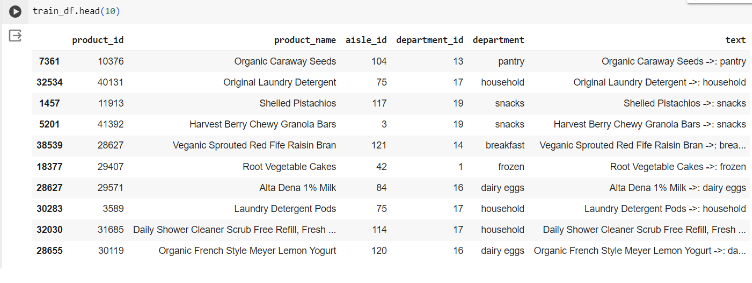

Now, let's load our data from CSV files. We'll use two datasets: products.csv and departments.csv. Our goal is to merge these datasets and prepare them for training.

import pandas as pd

df_product = pd.read_csv("/content/products.csv")

df_dept = pd.read_csv('/content/departments.csv')

df_joined = pd.merge(df_product, df_dept, on = ['department_id'])

df_joined['text'] = df_joined.apply(lambda row: row['product_name'] + " ->:

" + row['department'], axis = 1)

from sklearn.model_selection import train_test_split

train_df, test_df = train_test_split(df_joined, test_size=0.2,

random_state=42)

train_df.head(10)

from datasets import Dataset,DatasetDict

train_dataset_dict = DatasetDict({

"train": Dataset.from_pandas(train_df),

})

Finally, convert the training DataFrame into a format suitable for the Hugging Face datasets library:

train_dataset_dict

DatasetDict({

train: Dataset({

features: ['product_id', 'product_name', 'aisle_id', 'department_id', 'department', 'text', '__index_level_0__'],

num_rows: 39750

})

})

Loading the Pre-Trained Model

To begin with, we need to load our pre-trained model. For this example, we'll use a specific model from the Hugging Face repository. Ensure you have PyTorch and the transformers library installed.

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer,

BitsAndBytesConfig, AutoTokenizer

model_name = "TinyPixel/Llama-2-7B-bf16-sharded"

bnb_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type="nf4",

bnb_4bit_compute_dtype=torch.float16,

)

model = AutoModelForCausalLM.from_pretrained(

model_name,

quantization_config=bnb_config,

trust_remote_code=True

)

model.config.use_cache = False

tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)

tokenizer.pad_token = tokenizer.eos_token

Testing the Base Model Predictions Before Fine-Tuning

Let's see how the model performs before we fine-tune it. We'll use the transformers pipeline for text generation:

import transformers

pipeline = transformers.pipeline(

"text-generation",

model=model,

tokenizer=tokenizer,

torch_dtype=torch.bfloat16,

trust_remote_code=True,

device_map="auto",

)

sequences = pipeline(

[""Free & Clear Stage 4 Overnight Diapers" ->:","Bread Rolls ->:","French

Milled Oval Almond Gourmande Soap ->:"],

max_length=200,

do_sample=True,

top_k=10,

num_return_sequences=1,

eos_token_id=tokenizer.eos_token_id,

)

for seq in sequences:

print(f"Result: {seq[0]['generated_text']}")

Setting Up LoRA for Fine-Tuning

Now, let's configure LoRA for our fine-tuning process:

from peft import LoraConfig, get_peft_model

lora_alpha = 16

lora_dropout = 0.1

lora_r = 64

peft_config = LoraConfig(

lora_alpha=lora_alpha,

lora_dropout=lora_dropout,

r=lora_r,

bias="none",

task_type="CAUSAL_LM",

target_modules=["q_proj","v_proj"]

)

from transformers import TrainingArguments

output_dir = "./results"

per_device_train_batch_size = 4

gradient_accumulation_steps = 4

optim = "paged_adamw_32bit"

save_steps = 10

logging_steps = 1

learning_rate = 2e-4

max_grad_norm = 0.3

max_steps = 120

warmup_ratio = 0.03

lr_scheduler_type = "constant"

training_arguments = TrainingArguments(

output_dir=output_dir,

per_device_train_batch_size=per_device_train_batch_size,

gradient_accumulation_steps=gradient_accumulation_steps,

optim=optim,

save_steps=save_steps,

logging_steps=logging_steps,

learning_rate=learning_rate,

fp16=True,

max_grad_norm=max_grad_norm,

max_steps=max_steps,

warmup_ratio=warmup_ratio,

group_by_length=True,

lr_scheduler_type=lr_scheduler_type,

)

Configuring Training Parameters

Lastly, we'll set up the training arguments for our fine-tuning process:

from trl import SFTTrainer

max_seq_length = 512

trainer = SFTTrainer(

model=model,

train_dataset=train_dataset_dict['train'],

peft_config=peft_config,

dataset_text_field="text",

max_seq_length=max_seq_length,

tokenizer=tokenizer,

args=training_arguments,

)

trainer.train()

Testing the Fine-Tuned Model

Preparing Test Data

First, we need to prepare our test data. From the test_df DataFrame, we'll extract product names and create a shorter list for a quick test. In this case, we're taking a sample of three items:

lst_test_data = list(test_df['product_name'])

sample_size = 3

lst_test_data_short = lst_test_data[:sample_size]

lst_test_data_short

['Free & Clear Stage 4 Overnight Diapers', 'Beef pot roast with roasted potatoes, carrots, sweet onions, green beans, and a rich gravy Beef Pot Roast', 'Coffee Liquer']

import transformers

pipeline = transformers.pipeline(

"text-generation",

model=model,

tokenizer=tokenizer,

# torch_dtype=torch.bfloat16,

torch_dtype=torch.float16,

trust_remote_code=True,

device_map="auto",

)

sequences = pipeline(

lst_test_data_short,

max_length=100, #200,

do_sample=True,

top_k=10,

num_return_sequences=1,

eos_token_id=tokenizer.eos_token_id,

)

for ix,seq in enumerate(sequences):

print(ix,seq[0]['generated_text'])

“The vegan diet is not just about animal welfare. I think it's a diet that makes you feel much better, too. It’s more about a healthy mind in a healthy body. The vegan foods I eat are healthy and full of flavor and taste. I’m a big fan of veganism.”

- Paul McCartney, The Beatles

It was a bad day for the Brewers (15-17), who have lost three straight and four of five, with their only victory coming at home against the Cincinnati Reds on Tuesday.

Its a big game for us, said manager Ned Yost. …

| Prompt | Before Tuning | After Tuning |

|---|---|---|

| Veganic Sprouted Red Fife Raisin Bran | Result: “Veganic Sprouted Red Fife Raisin Bran” ->:

everybody is happy! “The vegan diet is not just about animal welfare. I think it's a diet that makes you feel much better, too. It’s more about a healthy mind in a healthy body. The vegan foods I eat are healthy and full of flavor and taste. I'm a big fan of veganism.” - Paul McCartney, The Beatles … |

Department - breakfast |

| Daily Shower Cleaner Scrub Free Refill, Fresh | Result: Daily Shower Cleaner Scrub Free Refill, Fresh ->:

kwietniu 14, 19:44 It was a bad day for the Brewers (15-17), who have lost three straight and four of five, with their only victory coming at home against the Cincinnati Reds on Tuesday. Its a big game for us, said manager Ned Yost. … |

Department - household |

| Beef pot roast with roasted potatoes, carrots, sweet onions, green beans, and a rich gravy Beef Pot Roast | Result: Beef pot roast with roasted potatoes, carrots, sweet onions, green beans, and a rich gravy Beef Pot Roast ->: 11:45 am-12:45 pm - $450/person. Unterscheidung. It's not just for dinner, it's a meal in a bowl. The beef pot roast is a classic meal that is enjoyed by millions of people all over the world. This is a recipe I made last Christmas for a large gathering of friends and family. … |

Department - frozen |

As we conclude this exploration into the world of Parameter-Efficient Fine-Tuning (PEFT), it's evident that we stand at the cusp of a new era in Natural Language Processing (NLP). PEFT, with its innovative approach to fine-tuning large language models, represents a significant leap forward in making advanced NLP both accessible and sustainable. By focusing on fine-tuning a subset of parameters, this technique not only conserves computational resources but also opens doors to customizing sophisticated models for specific tasks with greater efficiency.

Throughout this blog, we have journeyed from the foundational concepts of PEFT, contrasting it with traditional fine-tuning methods, to its practical implementation. We've delved into setting up the necessary environment, loading pre-trained models, preparing datasets, and the intricacies of applying Low-Rank Adaptation (LoRA) for fine-tuning. The culmination of this process in our model testing phase has showcased the real-world efficacy and potential of PEFT in enhancing model performance.

Fine-tuning is where the art of precision meets the power of language